Model the data

- By Daniil Maslyuk

- 11/5/2022

Skill 2.4: Optimize model performance

After you create the first version of your data model, you may realize that it doesn’t perform well enough. Because of the way Power BI stores data, this often means the model isn’t as efficient as it could be. In this section, we review the skills necessary to optimize a model’s performance and learn how to identify measures, visuals, and relationships that are slow.

When working with imported data in Power BI, keep in mind that the data is stored in a columnstore database, which means that the number of distinct values in a column (also known as cardinality) usually plays a more important role than the number of rows. Therefore, one way to address poor performance is to reduce cardinality levels, which you can do by changing data types or summarizing data.

Remove unnecessary rows and columns

In Power BI, it’s preferable to only load data that is necessary for reporting and then add more data later as required. In practice, you should disable loading of queries that aren’t needed for reporting and filter the data to only the required rows and columns before loading into the model.

Remove unnecessary rows

Reducing the number of rows requires some filtering criteria, which can be based on attributes or dates.

For example, instead of loading all Wide World Importers data, you could load data for a specific sales territory if you’re only interested in analyzing that specific sales territory. You can use parameters when filtering to make the process more manageable; this approach will also make it possible to change filters once the dataset is published to the Power BI service.

You can also filter by dates and load only recent data if you’re not interested in historical data. In addition to parameters, you can apply relative date filters, such as “is in the previous 2 years.”

Filtering rows after you create reports won’t break any visuals in the existing reports.

Remove unnecessary columns

Columns in a data model usually serve at least one of two purposes: supporting visuals or supporting calculations. It’s preferable not to load columns that aren’t used for either purpose, especially if they have a high number of distinct values.

Some data warehouses include primary keys for fact tables. Although that may be useful for data audit purposes, you should remove primary keys from Power BI data models because they have a unique value for every row, and fact tables can be long. Primary keys of fact tables can occupy over 50% of data model size without bringing any benefit. In the Wide World Importers example, removing the Sale Key column from the Sale table reduces the file size by 43%.

If you need to count the number of rows in a fact table, it’s more efficient to use COUNTROWS than DISTINCTCOUNT of the primary key column.
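As a minimal sketch of the difference, the two measures below count rows in the Sale table from the Wide World Importers example. The measure names are assumptions made for illustration; only the first measure keeps working after the Sale Key column is removed from the model.

```dax
-- Counts the rows of the table directly; no key column is needed.
Sale Rows = COUNTROWS ( Sale )

-- Returns the same number while Sale Key is unique for every row,
-- but it forces the high-cardinality key column to stay in the model.
Sale Rows From Key = DISTINCTCOUNT ( Sale[Sale Key] )
```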

Removing columns that are used in visuals or calculations is going to break existing reports or even the dataset. If you use the Remove Other Columns functionality in Power Query Editor, you get a single step that lists the columns you keep, which you can refer to and edit if you need to add a column to your model later. This step will also prevent columns added to the data source from being automatically brought into your model, such as a new column added to a SQL table by a database administrator.

Identify poorly performing measures, relationships, and visuals

Sometimes you may notice that the report performance is not optimal. Power BI Desktop has a feature called Performance Analyzer, which you can use to trace the slow-performing visuals and to see the DAX queries behind them.

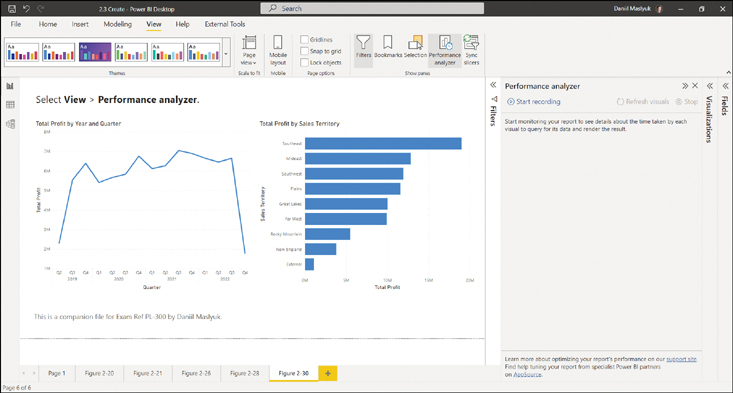

To turn on Performance Analyzer, go to the Report view and select View > Performance analyzer. This opens the Performance Analyzer pane shown in Figure 2-30.

FIGURE 2.30 Performance Analyzer

Performance Analyzer works by recording traces, and it then shows you how long each visual took to render. To start recording traces, select Start recording. After that, you need to perform some actions, such as applying filters, that will recalculate the visuals, or you can select Refresh visuals to refresh the visuals as they are. You’ll then see the rendering duration for each visual.

To identify the slowest visuals, you can sort visuals in the Performance Analyzer pane by selecting the arrow next to Duration (ms).

Each visual that contains data has a DAX query behind it, which you can copy by expanding the line of the visual in the Performance Analyzer pane and selecting Copy query. You can analyze the query further in DAX Studio, for example. It’s also possible to export all traces by selecting Export.
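For orientation, a DAX query is a statement that starts with EVALUATE and returns a table. The sketch below shows roughly what a simplified query for a visual that counts Sale rows by year might look like when pasted into DAX Studio. The 'Date'[Calendar Year] column and the "Sale Rows" column name are assumptions for illustration, and queries copied from Performance Analyzer are typically longer than this.

```dax
-- Simplified, hand-written query; not the literal text Performance Analyzer produces.
EVALUATE
SUMMARIZECOLUMNS (
    'Date'[Calendar Year],              -- grouping column (assumed to exist)
    "Sale Rows", COUNTROWS ( Sale )     -- value shown by the visual
)
ORDER BY 'Date'[Calendar Year]
```

Running a query like this on its own makes it easier to tell whether the time is spent in the query itself or in rendering the visual.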

To clear the Performance Analyzer pane, select Clear. Once you’re done recording traces, select Stop.

Reduce cardinality levels to improve performance

Power BI employs several compression mechanisms to reduce the size of data, the details of which are outside the scope of this book. One way to decrease the data size, which we cover next, is by reducing the cardinality of columns by changing data types or summarizing data.

Changing data types

In Power BI, two data types can be used for decimal numbers:

- Decimal number: can store more than four decimal places

- Fixed decimal number: can only store up to four decimal places

If your data contains more than four decimal places for some values and you don’t need that level of precision, you should change the data type to Fixed Decimal Number to save space.

Another way to reduce cardinality is to split a decimal number column into two columns, one holding the whole-number part and one holding the fractional part, which should be done as close to the data source as possible. The whole-number part can be of any range, whereas the fractional part should be between 0 and 1. These two columns can then be aggregated by using SUMX in the following fashion:

```dax
Full number =
SUMX(
    'Fact table',
    'Fact table'[Whole number] + 'Fact table'[Decimal number]
)
```

Although you’ll get two columns instead of one, in many cases each column will have lower cardinality than the original and, as a result, the data model will be smaller. For the same reason, in Power BI it’s best practice to split Date/Time columns that have a time component into two columns: Date and Time. Splitting increases the number of repeated values in each column, so each column is stored more efficiently in memory.

Some text columns, such as invoice numbers that are stored as text, can also sometimes be reduced in size. For example, if your fact table contains a column with invoice numbers that always consist of the INV prefix followed by eight digits, such as INV01234567, you can remove the INV prefix and change the data type of the column from Text to Whole Number, because storing whole numbers is usually more efficient than storing text. If the prefix is inconsistent, you can split it off and move it to a different column.
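To see why a split helps, consider one year of timestamps recorded to the second: a single Date/Time column can hold up to 365 × 86,400 = 31,536,000 distinct values, whereas the split columns hold at most 365 distinct dates and 86,400 distinct times. The query below is a sketch of how you might compare the cardinalities in your own model, for example in DAX Studio. The three column names are hypothetical, and the query assumes you temporarily keep the original column alongside the split ones.

```dax
-- Compare the cardinality of the original column with its split parts.
-- All three column names are assumptions for illustration.
EVALUATE
ROW (
    "Distinct date-times", DISTINCTCOUNT ( 'Fact table'[Order DateTime] ),
    "Distinct dates", DISTINCTCOUNT ( 'Fact table'[Order Date] ),
    "Distinct times", DISTINCTCOUNT ( 'Fact table'[Order Time] )
)
```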
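If report users still need to see the familiar text form after the column is stored as a whole number, one option is to rebuild it at query time. The measure below is a sketch that assumes a whole-number column named 'Fact table'[Invoice number] (a hypothetical name) and a fixed INV prefix followed by eight digits; the measure name is also an assumption.

```dax
Invoice Label =
VAR InvoiceNumber = SELECTEDVALUE ( 'Fact table'[Invoice number] )
RETURN
    -- Return a label only when exactly one invoice is in the filter context.
    IF (
        NOT ISBLANK ( InvoiceNumber ),
        -- "00000000" pads with leading zeros, so 1234567 becomes INV01234567.
        "INV" & FORMAT ( InvoiceNumber, "00000000" )
    )
```

The text is produced only for the values a visual displays, so the model stores just the whole numbers.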

Summarizing data

If your source data provides a level of detail that’s not required by reporting, then you may want to consider summarizing your data to reduce cardinality.

For example, if the source data contains daily sales information but you only report monthly values, you may want to summarize your sales data to be at the month level instead of the day level. This approach will reduce the size of your model dramatically, though it will make the reporting of daily data impossible.

It’s preferable to summarize your data as close to the data source as possible. Power Query also allows you to summarize data by using the Group By functionality on the Transform ribbon.

Data summarization involves a trade-off between data model size and the available level of detail; whether you should summarize data depends on your business requirements.
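Before committing to a summarized table, it can help to estimate how many rows the coarser grain would leave. The query below is a rough sketch that compares the current row count of the Sale table with the number of rows at a month-by-customer grain. The 'Date' columns and Sale[Customer Key] are assumptions for illustration, and the query relies on a relationship from Sale to 'Date'; substitute the columns your reports actually group by.

```dax
-- Estimate the row reduction from summarizing daily data to the month level.
-- Column names are assumed; adjust them to match your model.
EVALUATE
ROW (
    "Rows at current grain", COUNTROWS ( Sale ),
    "Rows at month grain",
        COUNTROWS (
            SUMMARIZE (
                Sale,
                'Date'[Calendar Year],
                'Date'[Calendar Month Number],
                Sale[Customer Key]
            )
        )
)
```

If the two numbers are close, summarizing gives up daily detail for little gain in model size.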
