Manage high availability and disaster recovery

- By Victor Isakov

- 11/7/2017

Skill 4.3: Implement log shipping

Log shipping is typically used for disaster recovery scenarios. It can, however, also be used to synchronize data warehouses and scale out a reporting solution. Although log shipping has always been possible with SQL Server, it was introduced as a supported feature with the release of SQL Server 2000, which includes an interface and a number of system tables in the Microsoft system database.

This objective covers how to:

Architect log shipping

Configure log shipping

Monitor log shipping

Architect log shipping

Log shipping has a very simple architecture. It uses a number of SQL Server Agent jobs. When architecting a log shipping solution, make sure you get the schedules for the various log shipping jobs scheduled at the right time. Depending on your RTO and RPO you typically need to let preceding jobs complete before running the next job. Don’t forget to revisit your schedules periodically as your database might have grown in size and consequently the jobs take longer to run. As always, it is important to get the security for all the scheduled jobs correct, especially because you are running the jobs on different servers.

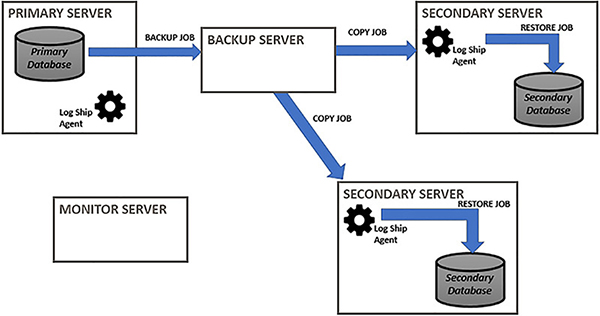

The log shipping architecture, shown in Figure 4-1, contains the following elements:

Primary server The primary server contains the database that is going to be log shipped. There can only be one primary server.

Primary database The primary database is the source database on the primary server that is log shipped. The database can’t be using the SIMPLE recovery model.

Secondary server The secondary server contains the copy of the primary database that is periodically updated through log shipping. There can be multiple secondary servers. The secondary server can be on a higher version of SQL Server from the primary server.

Secondary database The secondary database is a copy of the primary database that is hosted on the primary server. The secondary database can be potentially used for a reporting purpose (SELECT queries).

Monitor server The monitor server is a separate SQL Server instance that monitors the overall state of the log shipping solution. A monitor server is optional.

Backup job The backup job is a SQL Server Agent job that performs the backup job periodically and logs history to the local and monitor server. The backup job runs on the primary server.

Copy job The copy job is a SQL Server Agent job that performs the backup job periodically and logs history to the local and monitor server. The copy job runs on the secondary server.

Restore job The restore job is a SQL Server Agent job that performs the restore job periodically and logs history to the local and monitor server. It also deletes old file and history. The restore job runs on the secondary server.

Alert job The altert job is a SQL Server Agent job that generates an alert whenever a log shipping error occurs. The alert job runs on the monitor server.

Log ship agent The log ship agent is a process (sqllogship.exe) that is invoked by the SQL Server Agent jobs to perform the log shipping jobs.

FIGURE 4-1 Log shipping architecture

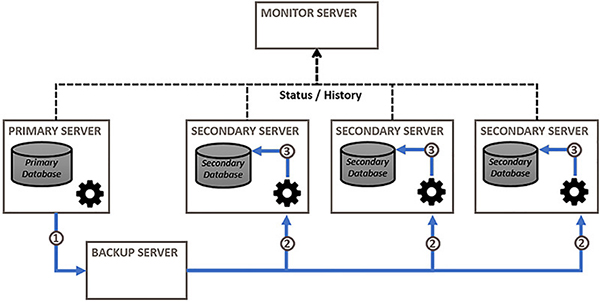

Log shipping works by scheduling a number of jobs via the SQL Server Agent. The core jobs include:

Performing a transaction log backup locally on the primary server. The backup destination can be local or a UNC path.

Copying the log backup to a secondary server. Multiple secondary servers are possible.

Restoring the log backup on the secondary server. The database on the secondary server can be left in the NORECOVERY or STANDBY state. Under the STANDBY state users will be able to perform SELECT queries against the log shipped database.

Figure 4-2 shows the high-level architecture of a log shipping solution with the previous three steps being performed.

FIGURE 4-2 Log shipping steps

The pros of using log shipping include:

Support for any edition of SQL Server.

Scope of protection is at the database level.

Users can potentially query the secondary database, thereby offloading reporting queries from the primary database.

Support for multiple secondary servers.

Support for a delay between changes made to the primary database and the secondary database. This can be important for disaster recovery where an organization might want to protect against accidental deletion of dataset.

Data changes to the secondary database can be scheduled at a frequency appropriate to the business.

The cons of using log shipping include:

There is no automatic fail over.

Manual failover is more complicated than other high-availability technologies.

Users can’t query the database while a transaction log is being restored.

Data loss is possible. For example, if the primary server or primary database fails, and you cannot access the orphaned log transactions, data will be lost.

Log shipped databases have to use the full recovery model.

Log shipping will impact your backup strategy. You will need to re-design your backup strategy so as to use log shipping’s log backups instead of your own. If you perform log backup outside of log shipping it will break the log-chain and log shipping will start failing.

A break in the log backup-chain will break log shipping. The log backup-chain can be broken by changing the database to a SIMPLE recovery model, or by performing a log backup outside of log shipping.

Log shipping relies on the SQL Server Agent running. If the SQL Server Agent is stopped for any reason on the primary or secondary servers the secondary database will fall further behind the primary database, which can potentially lead to data loss or inability to meet you RPO and RTO objectives.

Use log shipping for the following use cases:

Disaster recovery within a data center between servers. You can introduce a delay between when log backups are restored on the secondary server in case of user error.

Disaster recovery between data centers in the case of a data center being unavailable or a disaster happening where the database is lost in the primary data center.

Disaster recovery that has a delay been transactions being made on the primary database and being replayed on the secondary databases. This is not possible with Availability Groups.

Disaster recovery between sites that have a long distance between them, are unreliable, are expensive, or have a long latency.

Offload reporting from the OLTP primary databases. Reports running against the secondary database will no longer cause contention and consume resources on the primary server. The secondary servers can be located closers to the business units.

Configure log shipping

Use SQL Server Management Studio to configure log shipping, because it is much easier than creating the log shipping script yourself. If you want, you can use SQL Server Management Studio to only generate the log shipping configuration script and not configure log shipping itself. You can then review and save the script before executing it.

To practice setting up log shipping set up the following VMs in Hyper-V:

A domain controller (ADDS) for the SQL.LOCAL domain

A SQL Server instance (PRIMARY) joined to SQL.LOCAL

A SQL Server instance (SECONDARY) joined to SQL.LOCAL

A SQL Server instances (database administrator) joined to the domain

This server is optional

It is used to demonstrate the monitor server

You do not have to set up a monitor server

A Windows file server (STORAGE) joined to the domain

This server is optional

It is used for the backup files

You could use a share created on the domain controller instead, or either of the SQL Server instances

The following steps show how to configure log shipping from a primary server to a single secondary server. Users will not have access to the secondary server for reporting purposes.

Open SQL Server Management Studio and connect to the primary SQL Server instance.

Expand the Databases folder.

Right-click on the primary database and click on the Options page.

Make sure the primary database is using the full recovery model.

Click on the Transaction Log Shipping page.

Click on the Enable This As A Primary Database In A Log Shipping configuration.

Click on the Backup Settings button to configure the log shipping backup on the primary server.

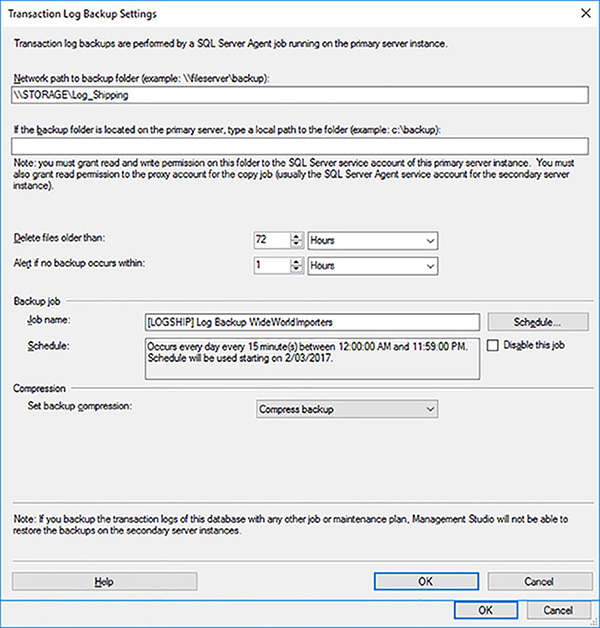

Configure the following transaction log backup settings, as shown in Figure 4-3.

UNC network path to where the log backups will be performed

Optionally, if the log backups will be performed locally, the local path

Duration after which the backup files will be automatically deleted

Duration after which an alert will be generated if backups fail

The backup job name

The database backup compression option

FIGURE 4-3 Enable log shipping for primary database

Click on the Schedule button.

Configure the log backup schedule to occur daily every 15 minutes.

Click on the OK button to close the Transaction Log Backup Settings dialog box.

Click on the Add button to add a secondary server.

Click on the Connect button to authenticate against the secondary server.

Enter the secondary server’s name and click on the Connect button.

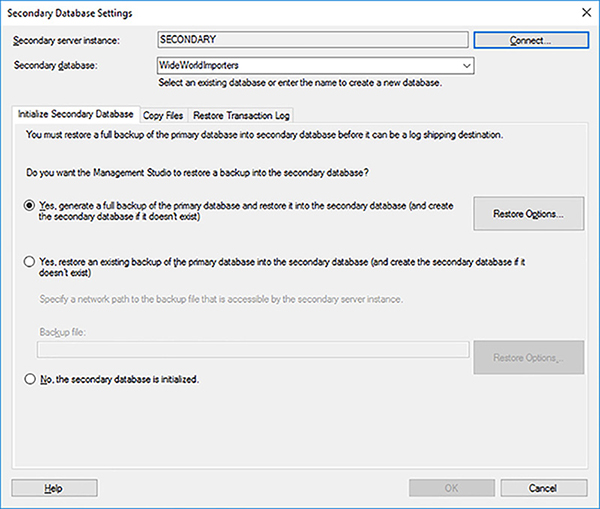

The primary database needs to be initialized on the secondary server before logs can be shipped to it. Configure the following secondary database properties, as shown in Figure 4-4.

Secondary database name.

Generate a full backup of the primary database and restore it on the secondary server.

FIGURE 4-4 Initialize Secondary Database

Click on the Restore Options button.

Configure the secondary database’s data and log paths on the secondary server.

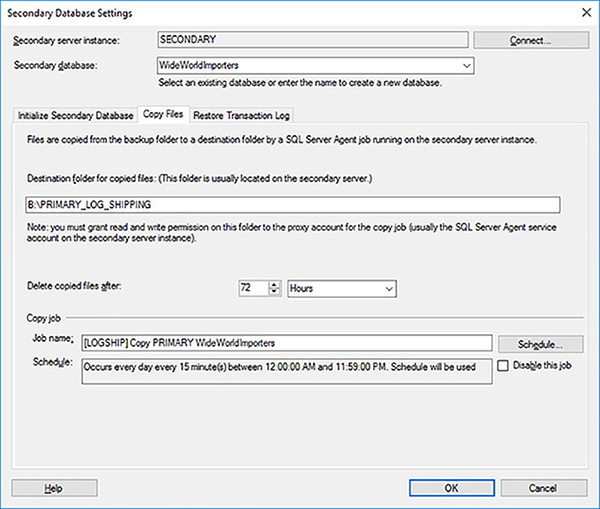

Click on the Copy Files tab.

Configure the following properties of the copy job, as shown in Figure 4-5.

Destination folder for the copied log backup files. You can use a local path on the secondary server or a UNC path.

Duration before the log backup files will be deleted.

Name for the copy job.

A schedule for the copy job, similar to how the schedule for the backup job was configured via the Schedule button.

FIGURE 4-5 Log Shipping Copy Job Properties

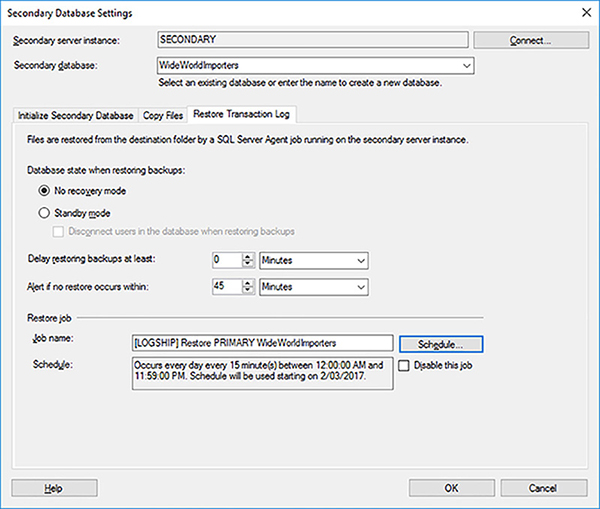

Click on the Restore Transaction Log tab

Configure the following properties of the restore transaction log job, as shown in Figure 4-6, and click on the OK button.

What recovery model the secondary database will remain in after the restore transaction log completes.

With the NORECOVERY recovery model users will not be able to access the secondary database. Subsequent log backups will not be blocked because there are no locks acquired by users within the database.

With the STANDBY recovery model users will be able to use the secondary database in a read-only fashion. However, there is potential for these users to block subsequent restore operations. A restore cannot be performed if users have locks acquired in the secondary database. Check the Disconnect Users In The Database When Restoring Backups check box if you want log shipping to immediately disconnect any users before restoring the log. Users might not be happy!

Whether you want a delay before log backups are restored. This can be very important for protecting against user errors, such as accidental modifications to a table or an accidental table truncation.

Duration before you will be alerted if no restore operation occurs.

Name for the restore transaction log job.

A schedule for the restore transaction log job, similar to how the schedule for the backup job was configured via the Schedule button.

For a data warehouse scenario, you might want to only restore the database once at the end of the day or after midnight. In this case the scheduled restore transaction log job will restore all the log backups required in the correct sequence. Because log shipping keeps track of the history of what has been performed in the [msdb] system database, it is very resilient.

Your backup, copy and restore jobs can run at different frequencies. It is not uncommon to backup and copy the log files at a faster frequency, such as every 15 minutes, than the restore job, that can run hourly, or even once a day.

FIGURE 4-6 Log shipping restore transaction log properties

Click on the Script drop-down list and select the Script Action To A New Query Window option to review and/or save the log shipping configuration to a Transact-SQL script.

Click on the OK button to deploy the log shipping configuration.

Listing 4-1 shows the Transact-SQL script that was generated to configure the log shipping solution.

LISTING 4-1 Log shipping configuration

-- Execute the following statements at the Primary to configure Log Shipping

-- for the database [PRIMARY].[WideWorldImporters],

-- The script needs to be run at the Primary in the context of the [msdb] database.

-------------------------------------------------------------------------------------

-- Adding the Log Shipping configuration

-- ****** Begin: Script to be run at Primary: [PRIMARY] ******

DECLARE @LS_BackupJobId AS uniqueidentifier

DECLARE @LS_PrimaryId AS uniqueidentifier

DECLARE @SP_Add_RetCode As int

EXEC @SP_Add_RetCode = master.dbo.sp_add_log_shipping_primary_database

@database = N'WideWorldImporters'

,@backup_directory = N'\\STORAGE\Log_Shipping'

,@backup_share = N'\\STORAGE\Log_Shipping'

,@backup_job_name = N'[LOGSHIP] Log Backup WideWorldImporters'

,@backup_retention_period = 4320

,@backup_compression = 2

,@backup_threshold = 60

,@threshold_alert_enabled = 1

,@history_retention_period = 5760

,@backup_job_id = @LS_BackupJobId OUTPUT

,@primary_id = @LS_PrimaryId OUTPUT

,@overwrite = 1

IF (@@ERROR = 0 AND @SP_Add_RetCode = 0)

BEGIN

DECLARE @LS_BackUpScheduleUID As uniqueidentifier

DECLARE @LS_BackUpScheduleID AS int

EXEC msdb.dbo.sp_add_schedule

@schedule_name =N'Every 15 minutes'

,@enabled = 1

,@freq_type = 4

,@freq_interval = 1

,@freq_subday_type = 4

,@freq_subday_interval = 15

,@freq_recurrence_factor = 0

,@active_start_date = 20170302 -- Change as appropriate

,@active_end_date = 99991231

,@active_start_time = 0

,@active_end_time = 235900

,@schedule_uid = @LS_BackUpScheduleUID OUTPUT

,@schedule_id = @LS_BackUpScheduleID OUTPUT

EXEC msdb.dbo.sp_attach_schedule

@job_id = @LS_BackupJobId

,@schedule_id = @LS_BackUpScheduleID

EXEC msdb.dbo.sp_update_job

@job_id = @LS_BackupJobId

,@enabled = 1

END

EXEC master.dbo.sp_add_log_shipping_alert_job

EXEC master.dbo.sp_add_log_shipping_primary_secondary

@primary_database = N'WideWorldImporters'

,@secondary_server = N'SECONDARY'

,@secondary_database = N'WideWorldImporters'

,@overwrite = 1

-- ****** End: Script to be run at Primary: [PRIMARY] ******

-- Execute the following statements at the Secondary to configure Log Shipping

-- for the database [SECONDARY].[WideWorldImporters],

-- the script needs to be run at the Secondary in the context of the [msdb] database.

-------------------------------------------------------------------------------------

-- Adding the Log Shipping configuration

-- ****** Begin: Script to be run at Secondary: [SECONDARY] ******

DECLARE @LS_Secondary__CopyJobId AS uniqueidentifier

DECLARE @LS_Secondary__RestoreJobId AS uniqueidentifier

DECLARE @LS_Secondary__SecondaryId AS uniqueidentifier

DECLARE @LS_Add_RetCode As int

EXEC @LS_Add_RetCode = master.dbo.sp_add_log_shipping_secondary_primary

@primary_server = N'PRIMARY'

,@primary_database = N'WideWorldImporters'

,@backup_source_directory = N'\\STORAGE\Log_Shipping'

,@backup_destination_directory = N'B:\PRIMARY_LOG_SHIPPING'

,@copy_job_name = N'[LOGSHIP] Copy PRIMARY WideWorldImporters'

,@restore_job_name = N'[LOGSHIP] Restore PRIMARY WideWorldImporters'

,@file_retention_period = 4320

,@overwrite = 1

,@copy_job_id = @LS_Secondary__CopyJobId OUTPUT

,@restore_job_id = @LS_Secondary__RestoreJobId OUTPUT

,@secondary_id = @LS_Secondary__SecondaryId OUTPUT

IF (@@ERROR = 0 AND @LS_Add_RetCode = 0)

BEGIN

DECLARE @LS_SecondaryCopyJobScheduleUID As uniqueidentifier

DECLARE @LS_SecondaryCopyJobScheduleID AS int

EXEC msdb.dbo.sp_add_schedule

@schedule_name =N'Every 15 minutes'

,@enabled = 1

,@freq_type = 4

,@freq_interval = 1

,@freq_subday_type = 4

,@freq_subday_interval = 15

,@freq_recurrence_factor = 0

,@active_start_date = 20170302 -- Change as appropriate

,@active_end_date = 99991231

,@active_start_time = 0

,@active_end_time = 235900

,@schedule_uid = @LS_SecondaryCopyJobScheduleUID OUTPUT

,@schedule_id = @LS_SecondaryCopyJobScheduleID OUTPUT

EXEC msdb.dbo.sp_attach_schedule

@job_id = @LS_Secondary__CopyJobId

,@schedule_id = @LS_SecondaryCopyJobScheduleID

DECLARE @LS_SecondaryRestoreJobScheduleUID As uniqueidentifier

DECLARE @LS_SecondaryRestoreJobScheduleID AS int

EXEC msdb.dbo.sp_add_schedule

@schedule_name =N'Every 15 minutes'

,@enabled = 1

,@freq_type = 4

,@freq_interval = 1

,@freq_subday_type = 4

,@freq_subday_interval = 15

,@freq_recurrence_factor = 0

,@active_start_date = 20170302 -- Change as appropriate

,@active_end_date = 99991231

,@active_start_time = 0

,@active_end_time = 235900

,@schedule_uid = @LS_SecondaryRestoreJobScheduleUID OUTPUT

,@schedule_id = @LS_SecondaryRestoreJobScheduleID OUTPUT

EXEC msdb.dbo.sp_attach_schedule

@job_id = @LS_Secondary__RestoreJobId

,@schedule_id = @LS_SecondaryRestoreJobScheduleID

END

DECLARE @LS_Add_RetCode2 As int

IF (@@ERROR = 0 AND @LS_Add_RetCode = 0)

BEGIN

EXEC @LS_Add_RetCode2 = master.dbo.sp_add_log_shipping_secondary_database

@secondary_database = N'WideWorldImporters'

,@primary_server = N'PRIMARY'

,@primary_database = N'WideWorldImporters'

,@restore_delay = 0

,@restore_mode = 0

,@disconnect_users = 0

,@restore_threshold = 45

,@threshold_alert_enabled = 1

,@history_retention_period = 5760

,@overwrite = 1

END

IF (@@error = 0 AND @LS_Add_RetCode = 0)

BEGIN

EXEC msdb.dbo.sp_update_job

@job_id = @LS_Secondary__CopyJobId

,@enabled = 1

EXEC msdb.dbo.sp_update_job

@job_id = @LS_Secondary__RestoreJobId

,@enabled = 1

END

-- ****** End: Script to be run at Secondary: [SECONDARY] ******

GO

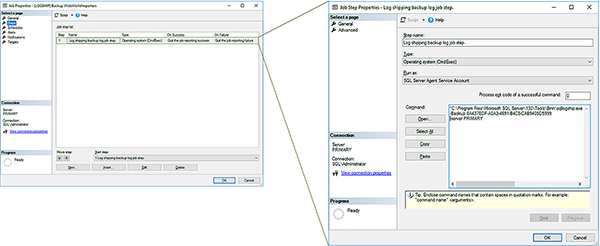

Figure 4-7 shows the log shipping backup job and step created on the primary server. Note how the log shipping back up job does not run any Transact-SQL commands. Instead it invokes the sqllogship.exe agent with a number of parameters. The copy and the backup jobs are also run on the secondary server. If you connect to the secondary server in SQL Server Management Studio, the secondary database is permanently in a restoring state.

FIGURE 4-7 Log shipping backup job on primary server

Because log shipping uses an agent, it is difficult to customize log shipping. That is why it is not uncommon for database administrators to develop and implement their own custom log shipping through Transact-SQL scripts.

The sqllogship.exe agent supports the following parameters:

sqllogship

-server instance_name

{

-backup primary_id |

-copy secondary_id |

-restore secondary_id

}

[ –verboselevel level ]

[ –logintimeout timeout_value ]

[ -querytimeout timeout_value ]

To help troubleshoot log shipping you can change the -verboselevel parameter as required. Table 4-2 shows the different levels supported. The default value used is 3.

TABLE 4-2 Sqllogship.Exe -Verboselevel Parameter Options

Level |

Description |

|---|---|

0 |

Output no tracing and debugging messages |

1 |

Output error-handling messages |

2 |

Output warnings and error-handling messages |

3 |

Output informational messages, warnings, and error-handling messages |

4 |

Output all debugging and tracing messages |

Monitor log shipping

It is important to monitor your log shipping to ensure that log shipping is working as expected, because it could potentially impact your RPO/RTO SLAs. Log shipping allows you to create a separate monitor server that will monitor log shipping jobs on the primary and secondary servers. If a customized threshold expires, an alert will be generated to indicate that a job has failed.

The following steps show how to configure a monitor server for your log shipping solution.

Open SQL Server Management Studio and connect to the primary SQL Server instance.

Expand the Databases folder.

Right-click on the primary database and click on the Transaction Log Shipping page.

Check the Use A Monitor Server Instance check box.

Click on the Settings button to configure the monitor server.

Click on the Connect button to authenticate against the monitoring server.

Provider the server name and authentication details for the monitor server in the Connect to Server dialog box and click the Connect button.

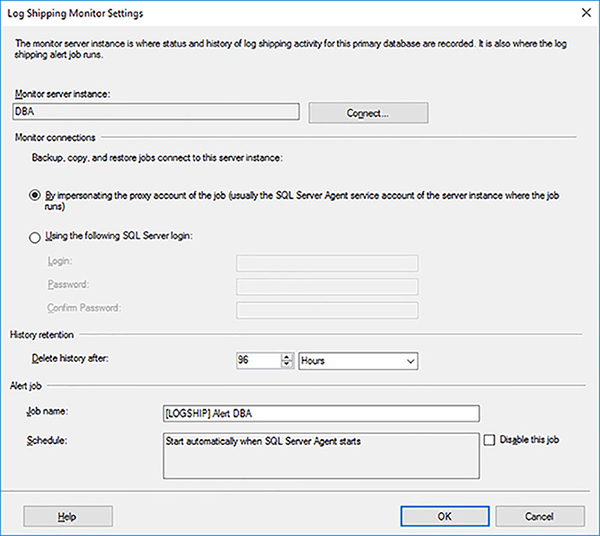

Configure the following details, as shown in Figure 4-8, to configure the monitor server:

Credentials to be used by the monitor server. The best and easiest set up is to impersonate the proxy account of the log shipping jobs.

The history retention after which history will be deleted.

In a production environment, it is not uncommon to configure such information for a number of years.

The name of the alert job.

FIGURE 4-8 Configuring the monitor server settings

Click on the OK button to close the Log Shipping Monitor Settings dialog box.

Click on the OK button for SQL Server Management Studio to configure the log shipping monitor.

Listing 4-2 shows the Transact-SQL script that was generated to configure the log shipping monitor server.

LISTING 4-2 Log shipping configuration.

-- ****** Begin: Script to be run at Monitor: [DBA] ******

EXEC msdb.dbo.sp_processlogshippingmonitorsecondary

@mode = 1

,@secondary_server = N'SECONDARY'

,@secondary_database = N'WideWorldImporters'

,@secondary_id = N''

,@primary_server = N'PRIMARY'

,@primary_database = N'WideWorldImporters'

,@restore_threshold = 45

,@threshold_alert = 14420

,@threshold_alert_enabled = 1

,@history_retention_period = 5760

,@monitor_server = N'DBA'

,@monitor_server_security_mode = 1

-- ****** End: Script to be run at Monitor: [DBA] ******

The log shipping monitor server runs the log shipping alert job. Instead of running an executable, it executes the sys.sp_check_log_shipping_monitor_alert system stored procedure.

With the log shipping monitor configured you can now execute a number of reports to see the current state of log shipping. The log shipping reports will be different depending on whether you execute them from the monitor, the primary, or the secondary log shipping server.

To generate a report, use the following steps:

Open SQL Server Management Studio and connect to the log shipping SQL Server instance.

Right-click on the SQL Server instance that you want to execute the report against.

Select the Reports option.

Select the Standard Reports option.

Click the Transaction Log Shipping Status report.

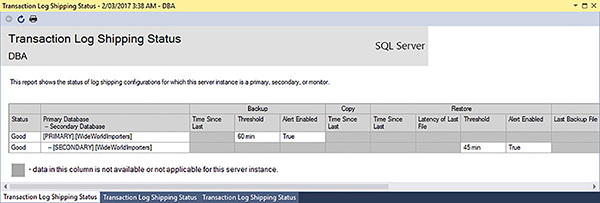

Figure 4-9 shows the Transaction Log Shipping Status report generated on the monitoring server. It shows all the servers in the log shipping configuration.

FIGURE 4-9 Transaction Log Shipping Status report on monitoring server

EXAM TIP

EXAM TIP