Understand Azure data solutions

- By Danilo Dominici, Francesco Milano, Daniel A. Seara

- 10/11/2021

Use cases

One of the best ways to learn a new technology is to read and practice with use cases that mimic the real-world problems you have to solve and to anticipate those you might face in your business. This section analyzes some of the common scenarios for which businesses need to innovate or modernize their infrastructure and that require the skills and competencies of a data engineer to be successfully addressed.

Advanced analytics

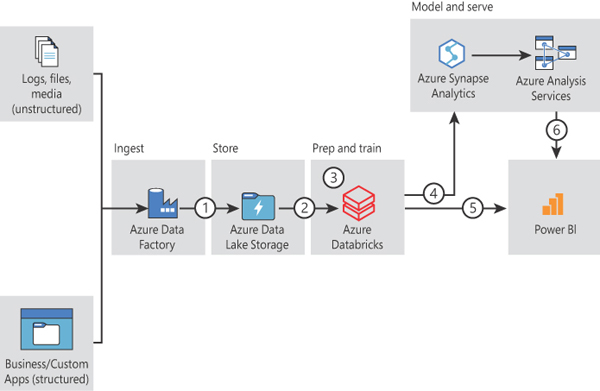

A modern data warehouse lets you bring together all your data in a single place, at any scale, and get insights through analytical dashboards or reports. Figure 1-18 shows the architecture of this data warehouse.

FIGURE 1.18 Advanced analytics architecture diagram

All your structured, semi-structured, and unstructured data (logs, files, and media) can be combined using Azure Data Factory and ported to an Azure Blob storage (or ADLS if you need a hierarchical storage). Then the data is cleaned and transformed using Azure Databricks and stored in Azure Synapse Analytics. From there, it can be presented to users through operational reports or analytical dashboards via Power BI.

Hybrid ETL with existing on-premises SSIS and Azure Data Factory

Many organizations are still in the middle of their digital transformation. They have on-premises data that must be combined and integrated with cloud-based data to obtain insights. Reworking existing ETL processes built with SQL Server Integration Services (SSIS) can result in a migration issue.

To facilitate a “lift-and-shift” migration of an existing SQL Server database and related SSIS packages, a hybrid approach must be used. Azure Data Factory can be employed as the primary orchestration engine, while continuing to access and execute on-premises SSIS packages via an integration runtime (IR). An IR can connect from Azure Data Factory to different on-premises resources such as SQL Server, file systems, and other data sources. It is also possible to execute local SSIS packages.

Several on-premises use cases are listed here:

Preparing data for analytical reporting

Loading sales data into a data warehouse for sales forecasting using ML algorithms

Automating loading of operational data stores or data warehouses for later analysis

Loading network router logs to a database for analysis

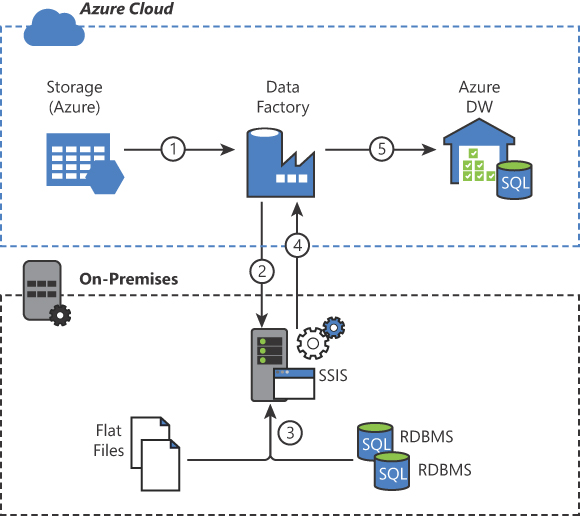

Figure 1-19 summarizes a sample data flow:

Azure Data Factory obtains data from an Azure Blob storage and executes a SSIS package hosted on-premises via IR.

The data-cleansing job processes both the flat files and the SQL Server database to prepare the data.

Data is sent back to Azure Data Factory and loaded into tables in Azure Synapse Analytics.

FIGURE 1.19 Hybrid ETL using ADF and SSIS

Internet of things architecture

Internet of things (IoT) applications connect devices (things) that send data from sensors or events that generates insights. Applications then analyze these insights and generate actions that improve a process or business. For example, an IoT solution might gather data from a telemetry device connected to a car’s telemetry system to extract data such as the engine temperature, RPMs, and GPS information. This could facilitate the notification of the car’s owner when the engine needs a checkup or send an alert in the event of an accident to the driver’s insurance company to enable it to provide immediate assistance.

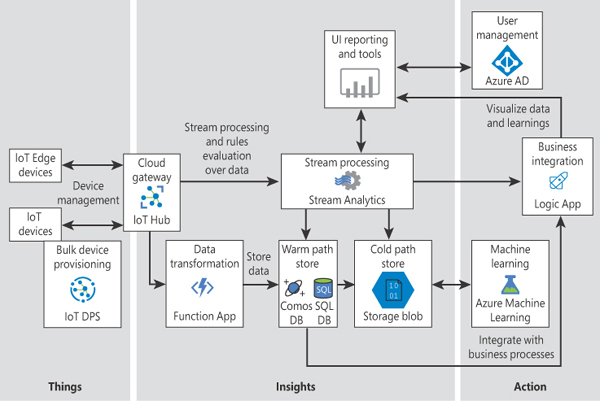

Figure 1-20 shows a reference architecture for IoT solutions, including not only the components required by a lambda or kappa architecture to create hot or cold paths, but also components that guarantee the solution’s security, performance, and analytical capabilities.

FIGURE 1.20 IoT sample reference architecture

The idea here is to ingest data as directly as possible from the physical devices or machines that generate it — for example, a temperature/pressure or counter sensor along an assembly line. IoT Hub helps the ingestion process by providing gateway services, and Azure functions transform the data as needed before it is stored in a Cosmos DB document and/or Azure SQL Database table. Azure Stream Analytics receives processed data from IoT Hub and stores it in a database or storage account, depending on the type of data. Azure Machine Learning evaluates newly added data and stores results in the cold path. Power BI or other tools can be used to extract information from the real-time data, using Active Directory credentials to secure access. To enable the customer to integrate this data with other business applications, you can extract it from multiple sources, such as Cosmos DB, Azure SQL Database, and Azure Stream Analytics, and consequently change data at the destination.