Understand Azure data solutions

- By Danilo Dominici, Francesco Milano, Daniel A. Seara

- 10/11/2021


Data-processing concepts

Data processing is the conversion of raw data to meaningful information. Depending on the type of data you have and how it is ingested into your system, you can process each data item as it arrives (stream processing) or store it in a buffer for later processing in groups (batch processing).
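The difference between the two modes can be sketched in a few lines of Python. This is only an illustration: the function names, the sample readings, and the batch size are assumptions made for the example and do not come from any Azure service.

```python
from typing import Iterable, Iterator, List


def stream_process(items: Iterable[float]) -> Iterator[float]:
    """Handle each item as soon as it arrives and emit a running total."""
    total = 0.0
    for item in items:
        total += item
        yield total


def batch_process(items: Iterable[float], batch_size: int) -> Iterator[float]:
    """Buffer items and emit one sum per full (or final partial) batch."""
    buffer: List[float] = []
    for item in items:
        buffer.append(item)
        if len(buffer) == batch_size:
            yield sum(buffer)
            buffer.clear()
    if buffer:  # flush whatever is left at the end of the input
        yield sum(buffer)


# Hypothetical sample readings.
readings = [1.0, 2.0, 3.0, 4.0, 5.0]
running_totals = list(stream_process(readings))
batch_totals = list(batch_process(readings, batch_size=2))
```

The streaming version produces a result for every item, while the batch version produces fewer results, each covering a group of items.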

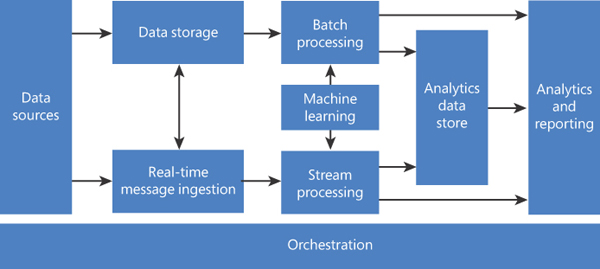

Data processing plays a key role in big data architectures. It’s how raw data is converted into actionable information and delivered to businesses through reports or dashboards. (See Figure 1-12.)

FIGURE 1-12 Big data processing diagram

Batch processing

Batch processing is the processing of a large volume of data all at once. This data might consist of millions of records per day and can be stored in a variety of ways (file, record, and so on). Batch-processing jobs typically run to completion without interruption, in sequential order. Batch processing might be used to process all the transactions a financial firm submits over the course of a week. It can also be used in payroll processes, line-item invoices, and for supply-chain and fulfillment purposes.

Batch processing is an extremely efficient way to process large amounts of data collected over a period of time. It also helps to reduce labor-related operational costs because it does not require specialized data entry clerks to support its functioning. It can also be used offline, which gives managers complete control over when it starts—for example, overnight or at the end of a week or a pay period.

There are a few disadvantages to using batch-processing software. First, debugging these systems can be tricky. If you don’t have a dedicated IT team or professional to troubleshoot, fixing the system when an error occurs could be difficult, perhaps requiring an outside consultant to assist. Second, although companies usually implement batch-processing software to save money, the software and training involve a considerable up-front expense. Managers will need to be trained to understand how to schedule a batch, what triggers it, and what certain notifications mean.

Batch processing is used in many scenarios, from simple data transformations to more complex ETL pipelines. Batch processing typically leads to further interactive data exploration and analysis, provides modeling-ready data for machine learning, or writes to a data store that is optimized for analytics and visualization. For example, batch processing might be used to transform a large set of flat, semi-structured CSV or JSON files into a structured format for use in Azure SQL Database, where you can run queries and extract information, or Azure Synapse Analytics, where the objective might be to concentrate data from multiple sources into a single data warehouse.
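As a rough sketch of that kind of transformation, the following Python example loads a flat CSV export into a typed relational table in a single batch. The in-memory SQLite database is only a stand-in for a target such as Azure SQL Database, and the column names and sample rows are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical flat export; in practice this would be a set of files in storage.
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,5.00
3,alice,42.50
"""


def load_batch(raw_csv: str, conn: sqlite3.Connection) -> int:
    """Parse the whole file, cast each field, and load all rows in one transaction."""
    rows = [
        (int(r["order_id"]), r["customer"], float(r["amount"]))
        for r in csv.DictReader(io.StringIO(raw_csv))
    ]
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    return len(rows)


# SQLite in memory stands in for the structured target store (an assumption).
conn = sqlite3.connect(":memory:")
loaded = load_batch(RAW_CSV, conn)
totals = conn.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()
```

Once the data is in a structured store, questions such as total spend per customer become a single query.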

Stream processing

Stream processing, also called real-time processing, enables you to analyze data almost instantaneously as it streams from one device to another. This continuous computation happens as data flows through the system, with no fixed time limit imposed on the output. Because data is processed almost as soon as it arrives, the system does not need to store large amounts of it.

Stream processing is highly beneficial if the events you wish to track are happening frequently and close together in time. It is also best to use if the event needs to be detected right away and responded to quickly. Stream processing, then, is useful for tasks like fraud detection and cybersecurity. If transaction data is stream-processed, fraudulent transactions can be identified and stopped before they are even complete. Other use cases include log monitoring and analyzing customer behavior.
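A minimal sketch of this idea, assuming a simple rule that flags a card when it makes more than three transactions within 60 seconds, might look like the following. The rule, the thresholds, and the event layout are illustrative assumptions, not a real fraud model.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator, Tuple

Event = Tuple[float, str, float]  # (timestamp_seconds, card_id, amount)


def flag_bursts(
    events: Iterable[Event], max_per_window: int = 3, window_s: float = 60.0
) -> Iterator[Event]:
    """Inspect each event on arrival and yield those that exceed the rate rule."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    for ts, card, amount in events:
        times = recent[card]
        times.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while times and ts - times[0] > window_s:
            times.popleft()
        if len(times) > max_per_window:
            yield (ts, card, amount)


# Hypothetical transaction stream, ordered by arrival time.
events = [
    (0.0, "card-A", 10.0),
    (5.0, "card-B", 25.0),
    (10.0, "card-A", 12.0),
    (20.0, "card-A", 11.0),
    (30.0, "card-A", 500.0),  # fourth card-A event within 60 seconds
    (200.0, "card-A", 9.0),   # earlier events have left the window
]
flagged = list(flag_bursts(events))
```

Because each event is evaluated as it arrives, the suspicious transaction is identified immediately rather than in a later batch run.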

One of the biggest challenges with stream processing is that the system’s long-term data output rate must be at least as fast as the long-term data input rate. Otherwise, the system will begin to have issues with storage and memory. Another challenge is coping with the huge amount of data that is being generated and moved. To keep data flowing through the system at an optimal rate, organizations must plan to reduce the number of copies, to target compute kernels, and to use the cache hierarchy as effectively as possible.
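The rate constraint is simple arithmetic: whenever input outpaces output, the difference accumulates as a backlog that must be held somewhere. The following sketch simulates that second by second; the rates are made-up numbers chosen only to show the effect.

```python
from typing import List


def backlog_over_time(input_rate: int, output_rate: int, seconds: int) -> List[int]:
    """Return the number of unprocessed events at the end of each second."""
    backlog = 0
    history = []
    for _ in range(seconds):
        # Events arrive, events are processed; the backlog can never go below zero.
        backlog = max(0, backlog + input_rate - output_rate)
        history.append(backlog)
    return history


# Hypothetical rates in events per second.
falling_behind = backlog_over_time(input_rate=1000, output_rate=900, seconds=5)
keeping_up = backlog_over_time(input_rate=1000, output_rate=1000, seconds=5)
```

With a shortfall of 100 events per second, the backlog grows without bound, which is what eventually exhausts memory or storage.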

Lambda and kappa architectures

Lambda and kappa architectures are the state-of-the-art patterns for handling batch and streaming big data workloads.

Lambda architecture

The key components of the lambda architecture are as follows:

Hot data-processing path: This applies to streaming-data workloads.

Cold data-processing path: This applies to batch-processed data.

Common serving layer: This combines outputs for both paths.

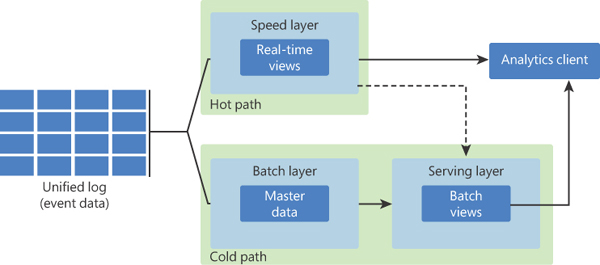

The goal of the architecture is to present a holistic view of an organization’s data, both from history and in near real-time, within a combined serving layer. (See Figure 1-13.)

FIGURE 1-13 Lambda architecture

Data that flows into the hot path is constrained by latency requirements imposed by the speed layer, so it can be processed as quickly as possible (with some loss in terms of accuracy in favor of speed). Data flowing into the cold path, on the other hand, is not subject to the same low-latency requirements. This allows for high-accuracy computation across large data sets, which can be very time-intensive.

Eventually, the hot and cold paths converge at the analytics client application. If the client needs to display timely, yet potentially less-accurate, data in real time, it will acquire its result from the hot path. Otherwise, it will select results from the cold path to display less-timely but more-accurate data.
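The choice the client makes can be sketched as a small serving layer that holds one view per path. The class, the field names, and the page-view counts below are hypothetical and exist only to illustrate the selection logic described above.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ServingLayer:
    """Holds one view per path and lets the client pick between them."""

    batch_view: Dict[str, int]  # cold path: accurate, but only as of the last batch run
    speed_view: Dict[str, int]  # hot path: up to date, but possibly approximate

    def query(self, key: str, realtime: bool) -> int:
        """Return the hot-path result when timeliness matters, else the cold-path one."""
        view = self.speed_view if realtime else self.batch_view
        return view.get(key, 0)


# Hypothetical page-view counts for a single page.
serving = ServingLayer(batch_view={"home": 10_000}, speed_view={"home": 10_042})
dashboard_value = serving.query("home", realtime=True)
report_value = serving.query("home", realtime=False)
```

A live dashboard would read the hot-path value, while a month-end report would read the cold-path value.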

The raw data stored at the batch layer is immutable. Incoming data is always appended to the existing data, and the previous data is never overwritten. Any changes to the value of a particular datum are stored as a new timestamped event record. This allows for recomputation at any point in time across the history of the data collected. The ability to recompute the batch view from the original raw data is important because it allows for new views to be created as the system evolves.
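A minimal sketch of such an append-only store follows. The `EventLog` class and its methods are assumptions made for illustration; the point is that changes are recorded as new timestamped events, so a view can be recomputed as of any moment.

```python
from typing import Dict, List, NamedTuple


class Event(NamedTuple):
    timestamp: int
    key: str
    value: float


class EventLog:
    """Append-only store: events are added, never updated or deleted."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, event: Event) -> None:
        self._events.append(event)

    def __len__(self) -> int:
        return len(self._events)

    def view_as_of(self, timestamp: int) -> Dict[str, float]:
        """Recompute the latest value per key using only events up to `timestamp`."""
        view: Dict[str, float] = {}
        for event in sorted(self._events, key=lambda e: e.timestamp):
            if event.timestamp <= timestamp:
                view[event.key] = event.value
        return view


# Hypothetical price changes for one item, recorded as separate events.
log = EventLog()
log.append(Event(1, "price", 10.0))
log.append(Event(5, "price", 12.0))
log.append(Event(9, "price", 11.0))
earlier_view = log.view_as_of(6)
latest_view = log.view_as_of(9)
```

Because nothing is overwritten, the earlier view remains reproducible even after later changes have been recorded.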

Distributed systems architects and developers commonly criticize the lambda architecture due to its complexity. A common downside is that code is often replicated in multiple services. Ensuring data quality and code conformity across multiple systems, whether massively parallel processing (MPP) or symmetric multiprocessing (SMP), calls for the same best practice: reproducing code as few times as possible. (You reproduce code in lambda because different services in MPP systems are better at different tasks.)

Kappa architecture

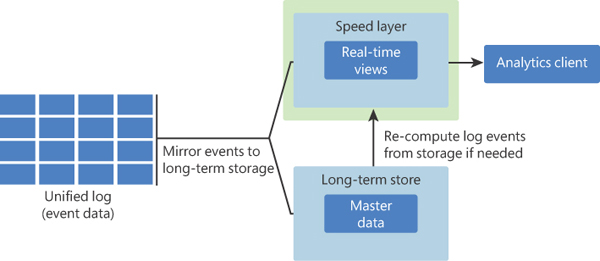

Kappa architecture (see Figure 1-14) proposes an immutable data stream as the primary source of record. This mitigates the need to replicate code in multiple services and solves one of the downsides of the lambda architecture.

FIGURE 1-14 Kappa architecture

Kappa architecture, whose development is attributed to Jay Kreps, CEO of Confluent, Inc. and co-creator of Apache Kafka, proposes an immutable data stream as the primary source of record rather than point-in-time representations of databases or files. In other words, if a data stream containing all organizational data can be persisted indefinitely (or for as long as use cases might require), then past events can be replayed through changed code as needed. This allows for unit testing and revisions of streaming calculations that lambda does not support. It also eliminates the need for batch-based ingress processing, as all data is written as events to the persisted stream.
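Replay can be illustrated with a small sketch in which the same persisted stream is run through two versions of a calculation. The stream contents, the sentinel value, and the function names are hypothetical.

```python
from typing import Callable, Iterable, List, Tuple

Reading = Tuple[str, float]  # (sensor_id, temperature)

# The persisted, immutable stream (hypothetical sample data).
STREAM: List[Reading] = [
    ("sensor-1", 20.5),
    ("sensor-1", 21.0),
    ("sensor-2", -999.0),  # sentinel written by a faulty sensor
    ("sensor-2", 19.0),
]


def average_v1(events: Iterable[Reading]) -> float:
    """Original job: average every reading."""
    values = [value for _, value in events]
    return sum(values) / len(values)


def average_v2(events: Iterable[Reading]) -> float:
    """Revised job: ignore the sentinel value before averaging."""
    values = [value for _, value in events if value != -999.0]
    return sum(values) / len(values)


def replay(stream: List[Reading], job: Callable[[Iterable[Reading]], float]) -> float:
    """Run a job over the full stream from the beginning."""
    return job(iter(stream))


old_result = replay(STREAM, average_v1)
new_result = replay(STREAM, average_v2)
```

Because the stream itself never changes, correcting the calculation is a matter of deploying the new code and replaying, and the same replay makes the job straightforward to unit test.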

Azure technologies used for data processing

Microsoft Azure has several technologies to help with both batch and streaming data processing. These include Azure Stream Analytics, Azure Data Factory, and Azure Databricks.

Azure Stream Analytics

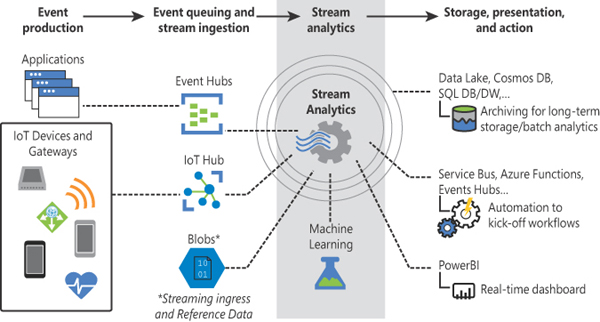

Azure Stream Analytics is a real-time analytics and complex event-processing engine designed to analyze and process high volumes of fast streaming data from multiple sources simultaneously. (See Figure 1-15.)

FIGURE 1-15 Azure Stream Analytics sources and destinations

Azure Stream Analytics can identify patterns and relationships in information extracted from input sources, including sensors, devices, and social media feeds, and can use them to trigger actions or initiate workflows.

Some common scenarios in which Azure Stream Analytics can be used are as follows:

Web logs analytics

Geospatial analytics for fleet management and driverless vehicles

Analysis of real-time telemetry streams from IoT devices

Remote monitoring and predictive maintenance of high-value assets

Real-time analytics on point-of-sale data for inventory control and anomaly detection

An Azure Stream Analytics job consists of an input, a query, and an output. The engine can ingest data from Azure Event Hubs (including Event Hubs for Apache Kafka), Azure IoT Hub, and Azure Blob storage. The query, which is based on the SQL query language, can be used to filter, sort, aggregate, and join streaming data over a period of time.
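Aggregating over a period of time usually means grouping events into windows. The following Python sketch imitates the effect of a fixed, non-overlapping (tumbling) window that averages readings per device. It is not Stream Analytics query syntax, and the event layout and window length are assumptions for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[int, str, float]  # (timestamp_seconds, device_id, reading)


def tumbling_window_avg(
    events: Iterable[Event], window_s: int
) -> Dict[Tuple[int, str], float]:
    """Average readings per device within fixed, non-overlapping time windows."""
    sums: Dict[Tuple[int, str], List[float]] = defaultdict(lambda: [0.0, 0.0])
    for ts, device, reading in events:
        # Each event belongs to exactly one window, identified by its start time.
        window_start = (ts // window_s) * window_s
        bucket = sums[(window_start, device)]
        bucket[0] += reading
        bucket[1] += 1
    return {key: total / count for key, (total, count) in sorted(sums.items())}


# Hypothetical telemetry events.
events = [
    (1, "dev-1", 20.0),
    (4, "dev-1", 22.0),
    (7, "dev-2", 30.0),
    (12, "dev-1", 25.0),
]
averages = tumbling_window_avg(events, window_s=10)
```

Each key in the result pairs a window start time with a device, which is roughly the shape of output a windowed aggregate query produces.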

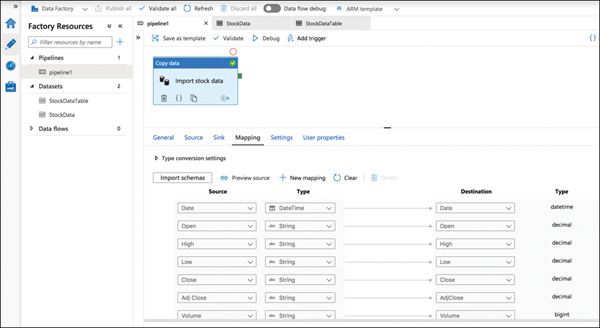

Azure Data Factory

Azure Data Factory (ADF) is a cloud-based ETL and data-integration service that enables you to quickly and efficiently create automated data-driven workflows (called pipelines) to orchestrate data movement and transform data at scale without writing code. These pipelines can ingest data from disparate data stores. Figure 1-16 shows a simple ADF pipeline that reads a CSV file from Azure Blob storage and imports its data into an Azure SQL Database.

FIGURE 1-16 Sample ADF pipeline

In a pipeline, you can combine data flows with the Copy Data activity, which is used for simple tasks such as copying from one of the many data sources to a destination (called a sink in ADF). You can perform data transformations visually or by using compute services such as Azure HDInsight, Azure Databricks, and Azure SQL Database.
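Although pipelines are typically built visually, each one is stored as a JSON definition. The following Python sketch builds an approximation of what a definition with a single Copy activity might look like and prints it as JSON. The pipeline, activity, and dataset names are hypothetical, and the exact properties a real pipeline needs depend on the datasets and linked services involved, so treat this as an outline of the shape rather than a deployable definition.

```python
import json

# Approximate shape of a pipeline with one Copy activity (source -> sink).
# All names below are hypothetical placeholders.
pipeline = {
    "name": "CopyCsvToSqlPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyFromBlobToSql",
                "type": "Copy",
                # Datasets describe where the data lives; they are defined separately.
                "inputs": [
                    {"referenceName": "BlobCsvDataset", "type": "DatasetReference"}
                ],
                "outputs": [
                    {"referenceName": "SqlTableDataset", "type": "DatasetReference"}
                ],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            }
        ]
    },
}

pipeline_json = json.dumps(pipeline, indent=2)
print(pipeline_json)
```

The source and sink entries correspond to the two ends of the copy described above: the CSV file in Blob storage and the table in Azure SQL Database.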

Azure Data Factory includes integration runtime components, which specify where the compute infrastructure that will run an activity gets dispatched from (whether it’s a public or private network). There are three types of integration runtimes:

Azure integration runtimes: These can be used to copy or transform data or execute activities between cloud resources.

Self-hosted integration runtimes: These are required to integrate cloud and on-premises resources.

Azure SQL Server Integration Services (SSIS) integration runtimes: These can be used to execute SSIS packages through Azure Data Factory.

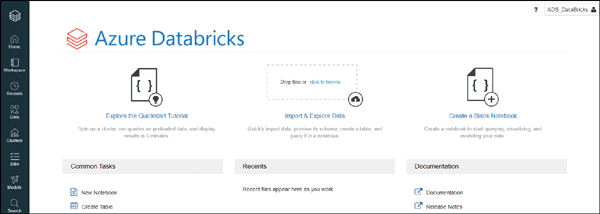

Azure Databricks

Azure Databricks is a fully managed version of the popular open-source Apache Spark analytics and data-processing engine. It provides an enterprise-grade, secure, cloud-based big data and ML platform. Figure 1-17 shows the workspace starting page of a newly created Azure Databricks service, where users can manage clusters, jobs, models, or data (using Jupyter notebooks).

FIGURE 1-17 Azure Databricks workspace starting page

In a big data pipeline, raw or structured data is ingested into Azure through Azure Data Factory in batches or streamed in near real-time using Event Hubs, IoT Hub, or Kafka. This data is then stored in Azure Blob storage or Azure Data Lake Storage (ADLS). As part of your analytics workflow, Azure Databricks can read data from multiple data sources, including Azure Blob storage, ADLS, Azure Cosmos DB, and Azure Synapse Analytics, and use Spark to turn it into insights.

Apache Spark is a parallel processing framework that supports in-memory processing to boost the performance of big data analytic applications on massive volumes of data. This means that Apache Spark can help in several scenarios, including the following:

ML: Spark contains several libraries, such as MLlib, that enable data scientists to perform ML activities. Typically, data scientists would also install Anaconda and use Jupyter notebooks to perform ML activities.

Streaming and real-time analytics: Spark has connectors to ingest data in real time from technologies like Kafka.

Interactive data analysis: Data engineers and business analysts can use Spark to analyze and prepare data, which can then be used by ML solutions or visualized with tools like Power BI.
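The general idea behind parallel, in-memory processing can be sketched without Spark itself: split the data into partitions, process each partition independently, and merge the partial results. The following example uses only the Python standard library and is an analogy, not Spark's API; the function names and the sample lines are assumptions.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import List


def count_words(partition: List[str]) -> Counter:
    """Process one partition independently of the others."""
    return Counter(word for line in partition for word in line.split())


def parallel_word_count(lines: List[str], num_partitions: int = 2) -> Counter:
    """Split the data, count each partition concurrently, then merge the results."""
    partitions = [lines[i::num_partitions] for i in range(num_partitions)]
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    total: Counter = Counter()
    for partial in partial_counts:
        total.update(partial)  # merge step: add up the per-partition counts
    return total


# Hypothetical input held entirely in memory.
lines = ["spark streams data", "spark batches data", "data"]
word_counts = parallel_word_count(lines)
```

A framework such as Spark applies the same split, process, and merge pattern across many machines and handles scheduling and failures on your behalf.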

Azure Databricks provides enterprise-grade security, including Azure Active Directory integration, role-based controls, and SLAs that protect stored data.

You can connect to Databricks from Excel, R, or Python to perform data analysis. There is also support for third-party BI tools such as Tableau, which can connect through JDBC/ODBC cluster endpoints.