Understand Azure data solutions

- By Danilo Dominici, Francesco Milano, Daniel A. Seara

- 10/11/2021

- Data-storage concepts

- Data-processing concepts

- Use cases

- Summary

- Summary exercise

- Summary exercise answers

In this sample chapter from Microsoft Azure Data Solutions - An Introduction, you will review types of data—structured, semi-structured, and unstructured—and their required type of storage.

Data engineers are responsible for data-related implementation tasks. These include the following:

Provisioning data-storage services

Ingesting streaming and batch data

Transforming data

Implementing security requirements

Implementing data-retention policies

Identifying performance bottlenecks

Accessing external data sources

Mastering data engineering requires learning a specific set of tools, architectures, and platforms. Where the SQL professional, for example, might master and use T-SQL as the primary data-manipulation tool, the data engineer may use additional technologies (like HDInsight and Cosmos DB) and languages (such as Python) to manipulate data in big data systems.

Additionally, moving from on-premises to cloud-based infrastructure requires some changes in the way a data engineer uses and manages data. For example, data extraction — a process by which a data engineer retrieves raw data from a structured or unstructured data pool and migrates it to a staging data repository — changes significantly when data processing is done in the cloud. With the traditional extract, transform, and load (ETL) approach, the transformation stage can be time-consuming and potentially tie up source system resources while the process completes. In the cloud, this approach can be changed to extract, load, and transform (ELT), in which data is immediately extracted and loaded into a large data repository such as Azure Cosmos DB or Azure Data Lake Storage to minimize resource contention.

Topics covered in this chapter:

Data-storage concepts

Data-processing concepts

Use cases

Data-storage concepts

The amount of data generated by systems and devices has increased significantly over the last decade. Almost everything we do digitally is recorded, generating an enormous mass of data. New technologies, roles, and approaches to working with this data are constantly emerging. As a data engineer, one of your main tasks is to select the appropriate storage technology to save and recall data quickly, efficiently, and safely.

Types of data

Before analyzing which data-storage technologies are available on Azure, let’s identify the three main categories of data you may have to deal with:

Structured data

Semi-structured data

Unstructured data

Structured data

Structured data — often referred to as relational data — is data that adheres to a strict schema, which defines the field names, data types, and relationship between objects. This is probably the most commonly known type of data.

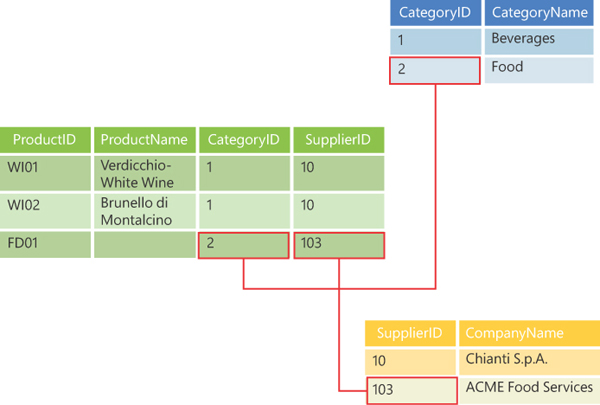

Structured data can be stored in database tables with rows and columns. Key columns may be used — for example, to indicate which column makes a row unique or how the table is related to another table. Figure 1-1 shows an example of a relational database structure.

Structured data is easy to use when you need to query or modify it because all the data follow the same format. But once you force a consistent structure, further evolution of the data can be more difficult because you have to change all existing data to have it conform to the new structure.

Semi-structured data

This is data that is not organized and does not conform to a formal structure of tables, but does have structures — such as tags or metadata — associated with it. This allows records, fields, and hierarchies within the data.

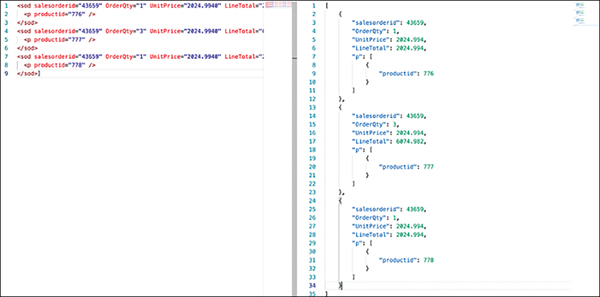

Common examples of semi-structured data are XML, JSON, and YAML documents. Notice that you can have a schema for this data; however, that schema is not strictly enforced as it would be with a structured relational database. The idea here is that this semi-structured data type allows data to be very flexible in terms of adding or removing fields or entire groups of information. Figure 1-2 shows an example of a JSON (left) and XML (right) document.

FIGURE 1.1 An example of a relational database structure

FIGURE 1.2 An example of JSON and XML data

Unstructured data

Unstructured data doesn’t have any kind of structure or predefined data model, and is not organized in any particular manner that allows for traditional analysis. This is the most abundant data type we encounter.

Examples of unstructured data include PDF documents, audio or video files, JPEG images, and text files. All of these may have an internal structure that defines how they are organized internally, but this organization is unknown to the user or to the application.

Understand data storage

Depending on the type of data you are managing, the type of data storage required may differ. For example, if you are using relational data, the most appropriate data storage is a relational database. In contrast, NoSQL data can be stored in a NoSQL database such as Azure Cosmos DB or in Azure Blob storage for documents, images, or other unstructured data files.

Relational databases

Relational databases organize data as a series of two-dimensional tables with rows and columns. Every row in a table has the same set of columns. This model is based on a mathematical concept called relational theory, first described in 1969 by English computer scientist Edgar F. Codd. All data is represented in terms of tuples and grouped into relations. The purpose of the relational model is to provide a declarative method for specifying data and how to query that data. Users can directly interact with the database management system, which takes care of storing and retrieving data, to read the information they want from it.

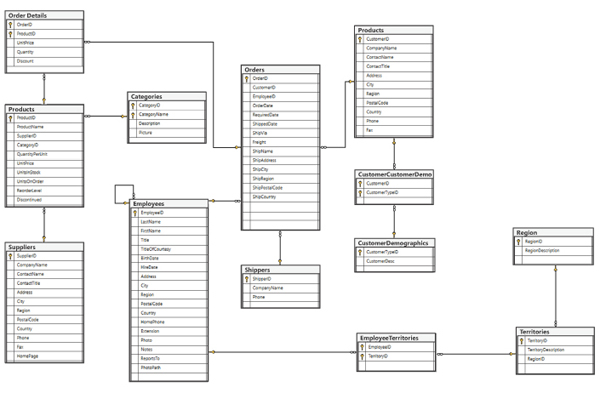

Most vendors provide a dialect of the Structured Query Language (SQL) for retrieving and managing data. A relational database management system (RDBMS) typically implements a transactionally consistent mechanism that conforms to the atomic, consistent, isolated, durable (ACID) model for updating information. Figure 1-3 shows an example of a relational database diagram.

FIGURE 1.3 A diagram of a famous sample relational database called the Northwind database

An RDBMS is very useful when strong consistency guarantees are important — where all changes are atomic and transactions always leave the data in a consistent state. So, information stored in an RDBMS must be put into a relational structure by following the normalization process. While this process is well understood, it can lead to inefficiencies, because of the need to disassemble logical entities into rows in separate tables and then reassemble the data when running queries. Furthermore, distributing storage and processing across machines is not possible with a traditional RDBMS.

Non-relational (or NoSQL) databases

A non-relational database does not use the tabular schema of rows and columns found in a traditional RDBMS. Instead, these databases use a storage model that is optimized for the specific requirements of the type of data being stored.

Typical data stores in non-relational databases are key/value pairs, JSON documents, or graphs consisting of edges and vertices/nodes. What these data stores have in common is that they don’t use a relational model. Instead, they tend to be more specific in the type of data they support and how that data can be queried.

Document data store

A document data store is used to manage a set of named strings and object data values in an entity called a document — typically a JSON document. Each field value can be a scalar item, like a number or a string, or a compound element, such as a list or a parent-child collection.

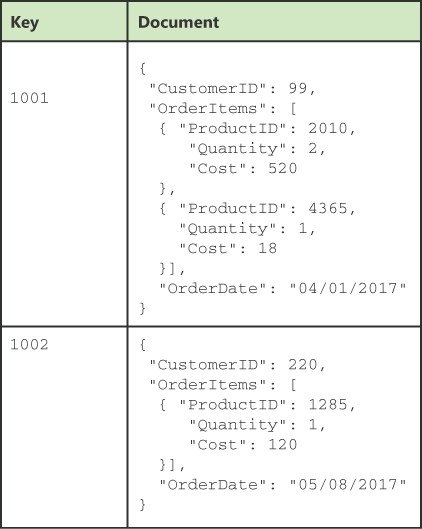

Figure 1-4 shows an example of a JSON document with scalar values (CustomerID) and item collections (OrderItems), each one itself made by scalar values.

FIGURE 1.4 An example of a JSON document

This is one of the principal differences from a relational table: a JSON document can contain all the information about the entity it refers to, while a relational database must spread that data across several tables. Moreover, a JSON document does not require that all documents have the same structure, providing a high level of flexibility.

In the example shown in Figure 1-4, applications can retrieve data by using the document key, which is the unique identifier of the document. To help distribute data evenly, the document key can be hashed and can be automatically generated by the document database itself. The application can also query the documents based on the value of one or more fields. Some document databases support indexing to facilitate fast lookup of documents based on one or more indexed fields. Many document data stores support in-place updates, enabling the application to modify specific fields of the document without rewriting the entire document.

Columnar data store

Where a relational database is optimized for storing rows of data, typically for transactional applications, a columnar database is optimized for fast retrieval of columns of data, typically in analytical applications. Column-oriented storage for database tables is an important factor in analytic query performance because it reduces the overall disk I/O requirements and the amount of data you need to load from disk.

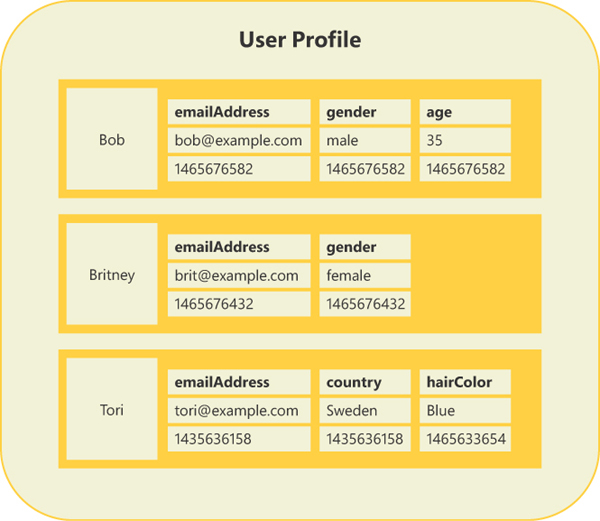

Columns are divided into groups called column families. Each column family holds a set of columns that are logically related and are typically retrieved or manipulated as a unit. Data that is accessed separately can be stored in separate column families. This enables you to add new columns dynamically, even if rows are sparse — that is, a row doesn’t need to have a value for every column. Figure 1-5 shows an example of a column family.

Key/Value data store

A key/value store is essentially a large hash table. You associate each data value with a unique key, and the key/value store uses this key to store the data by using an appropriate hashing function. The hashing function is selected to provide an even distribution of hashed keys across the data storage.

Most key/value stores support only simple query, insert, and delete operations. To modify a value (either partially or completely), an application must overwrite the existing data for the entire value. In most implementations, reading or writing a single value is an atomic operation. If the value is large, writing may take some time.

An application can store arbitrary data as a set of values, although some key/value stores impose limits on the maximum size of values. The stored values are opaque to the storage system software. Any schema information must be provided and interpreted by the application. Essentially, values are blobs, and the key/value store simply retrieves or stores the value by key.

FIGURE 1.5 Example of a column family

Graph data stores

A graph data store manages two types of information:

Nodes These represent entities.

Edges These specify the relationships between entities.

Similar to a relational table, nodes and edges can have properties that provide information about that node or edge or a direction indicating the nature of the relationship.

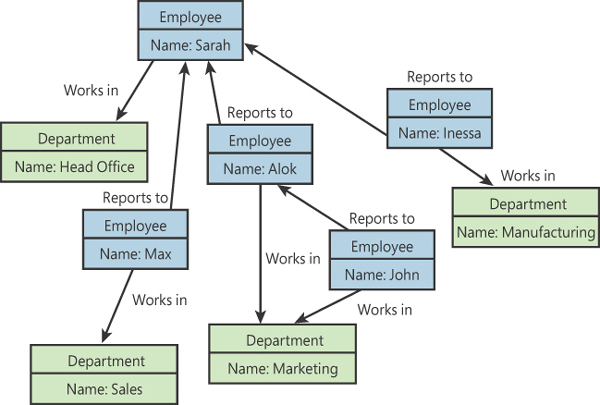

The purpose of a graph data store is to enable an application to efficiently perform queries that traverse the network of nodes and edges and to analyze the relationships between entities. The diagram in Figure 1-6 shows an organization’s personnel data structured as a graph. The entities are employees and departments; the edges indicate reporting relationships and the department in which the employees work. In this graph, the arrows on the edges show the direction of the relationships.

FIGURE 1.6 Example of a graph

Data storage in Azure

Now that you are more familiar with the various data types and their storage types, let’s take a look at what data storage technologies are available on Azure and when to use them to properly store your data.

Azure storage account

An Azure storage account is the base storage type used within Microsoft Azure. It offers a massively scalable object store for data objects and file system services, a messaging store for reliable messaging, and a NoSQL table store.

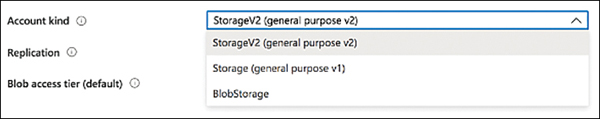

As shown in Figure 1-7, which depicts the account type drop-down list on the Azure Portal storage account creation form, when creating a new storage account, you can choose between blob storage, which can contain only simple unstructured data such as images or videos, and two generations of Data Lake Storage.

FIGURE 1.7 Azure Portal storage account creation form

Azure Blob storage

Azure Blob storage is Microsoft’s object storage solution for the cloud. It is optimized for storing massive amounts of unstructured data.

Blob storage is designed for:

Serving images or documents directly to a browser

Storing files for distributed access

Streaming video and audio

Storing data for backup and restore, disaster recovery, and archiving

Storing data for analysis by an on-premises or Azure-hosted service

Writing to log files

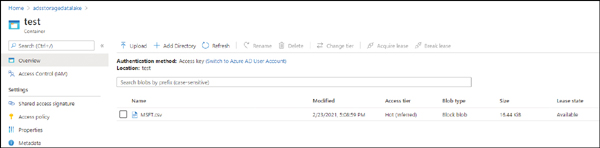

Blob storage can store block blobs and append blobs into containers, which are the logical space where data is organized. Figure 1-8 shows an Azure blob storage container, named test, which contains a CSV file named MSFT.csv.

FIGURE 1.8 Azure blob storage container

Azure storage provides several options for accessing data, based on their usage patterns. These options are called access tier. There are three access tiers:

Hot access tier This access tier is optimized for frequent access in the storage account and is the default option for new Azure Blob storage accounts.

Cool access tier This tier is optimized for large amounts of data that are infrequently accessed and are stored for at least 30 days. Accessing the cool access tier can be more expensive than accessing data in the hot tier.

Archive access tier This tier is available only for block blobs and is optimized for data that can tolerate a high latency in retrieving data (several hours) and that can remain in this tier for at least 180 days.

Like other Azure storage technologies, Azure Blob storage employs redundancy options, which define where and how data is replicated. These options include the following:

Locally redundant storage (LRS) Data is copied synchronously three times within the primary region.

Zone-redundant storage (ZRS) Data is copied synchronously across three Azure availability zones in the primary region.

Geo-redundant storage (GRS) Data is copied synchronously three times in the primary region, then copied to the secondary region. To access data in the secondary region, the read-access geo-redundant storage (RA-GRS) option must be enabled.

Geo-zone-redundant storage (GZRS) Data is copied synchronously across three Azure availability zones in the primary region, then copied asynchronously to the secondary region. The RA-GRS option must be enabled to access data in the secondary region.

Once data is stored into containers, users or client applications can access objects in Azure Blob storage via HTTP/HTTPS from anywhere in the world using a broad range of access methods. These include the following:

The Azure storage REST API

Azure PowerShell

Azure CLI

An Azure storage client library

There are also a lot of client libraries that developers can use to interact with Azure blob storage from within their applications using several common languages, such as the following:

.NET

Java

Node.js

Python

Go

PHP

Ruby

Azure Data Lake Storage Gen1

Azure Data Lake Storage Gen1 (ADLS Gen1) is an enterprise-wide hyper-scale repository for big data analytic workloads. ADLS Gen1 is an Apache Hadoop file system that is compatible with Hadoop Distributed File System (HDFS) and works with the Hadoop ecosystem.

ADLS Gen1 provides unlimited storage and can store any data in its native format, without requiring prior transformation. It uses Azure Active Directory for authentication and access control lists (ACLs) to manage secure access to data.

Azure Data Lake Storage Gen2

Azure Data Lake Storage Gen2 (ADLS Gen2) is a set of capabilities dedicated to big data analytics built on top of Azure Blob storage. Combining the features of two existing services, Azure Blob storage and ADLS Gen1, this new generation of ADLS lets you use capabilities like file system semantics, directory and file level security, high availability/disaster recovery.

Key features of ADLS Gen2 are as follows:

Hierarchical namespace Objects and files are organized into a hierarchy of directories for efficient data access and better performance. Slashes are used in the name to mimic a hierarchical directory structure.

Hadoop-compatible access You can treat data as if it’s stored in an HDFS. With this feature, you can store the data in one place and access it through compute technologies including Azure Databricks, Azure HDInsight, and Azure Synapse Analytics without moving the data between environments.

Security ADLS Gen2 supports ACLs and Portable Operating System Interface (POSIX) permissions. You can set permissions at the directory or file level for data stored within the data lake. This security is configurable through technologies such as Apache Hive and Adobe Spark, and through utilities such as Azure Storage Explorer. All stored data is encrypted at rest using either Microsoft- or customer-managed keys.

Low cost ADLS Gen2 offers low-cost storage capacity and transactions.

Optimized performance The Azure Blob Filesystem (ABFS) driver is optimized specifically for big data analytics and corresponding REST APIs are surfaced through dfs.core.windows.net. Moreover, ADSL Gen2 organizes stored data into a hierarchy of directories and subdirectories, much like a file system, for easier navigation. As a result, data processing requires less computational resources, reducing both time and cost.

Data redundancy ADLS Gen2 takes advantage of Azure Blob replication models that provide data redundancy in a single data center with locally redundant storage (LRS), or to a secondary region using geo-redundant (GRS) storage. This feature ensures that your data is always available and protected if catastrophe strikes.

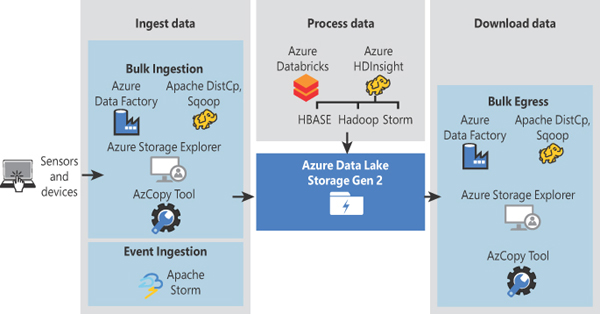

Depending on the source data, you can use several tools to ingest data into ADLS Gen2, including Azure Data Factory, Apache DistCp, Apache Sqoop, Azure Storage Explorer, and AzCopy. You can also ingest events using Apache Storm. (See Figure 1-9.)

FIGURE 1.9 ADLS Gen2 ingestion tools

You can use REST APIs to interact with ADLS Gen2 programmatically. To visualize data, you can use Power BI, which can access ADLS Gen2 directly. Query acceleration is a new capability that enables applications and analytics frameworks to dramatically optimize data processing by retrieving only the data they require to perform a given operation. This reduces the time and processing power needed to gain critical insights into stored data.

Azure SQL Database

Azure SQL Database is a fully managed platform as a service (PaaS) database engine designed for cloud applications when near-zero administration and enterprise-grade capabilities are needed. Azure SQL Database is always running on the last stable version of the SQL Server database engine and patched OS, with 99.999% availability. In fact, the newest capabilities of SQL Server are released first to Azure SQL Database, and then to SQL Server itself. Azure SQL Database also enables the use of advanced query processing features such as high-performance in-memory technologies and intelligent query processing.

There are three deployment options for Azure SQL Database:

Single database Azure SQL Database is a fully managed platform as a service (PaaS) database engine that handles most database-management functions. This flavor represents a fully managed, isolated database. It is similar to the contained database in the SQL Server database engine and is perfect for cloud applications or microservices that need a single data source.

Elastic pool This is a collection of single databases that have varying and unpredictable usage demands. This deployment option can be used to lower costs when managing multiple databases by sharing resources like CPU and memory at a set price.

Managed Instance This is a fully managed database that has near 100% compatibility with the latest SQL Server Enterprise Edition database engine. It allows existing SQL Server customers to lift and shift their on-premises applications to the cloud with minimal application and database changes, while preserving all PaaS capabilities. These include automatic patching and version updates, automated backups, and high availability, which drastically reduce management overhead.

Azure SQL Database (except Managed Instances) can be purchased as two different models:

Database transaction unit (DTU) A DTU represents a blended measure of CPU, memory, reads, and writes. The DTU-based purchasing model offers a set of preconfigured bundles of compute resources and storage to drive different levels of application performance. Having a fixed monthly payment for the selected bundle allows for a simpler way to choose from among the various offers. In the DTU-based purchasing model, you can choose between the basic, standard, and premium service tiers for Azure SQL Database, each with different resources limitations. The DTU-based purchasing model isn’t available for Azure SQL Managed Instance.

vCore Compared to the DTU-based purchasing model, this purchasing model provides several advantages, including higher compute, memory, I/O, and storage limits, as well as greater availability of hardware generation models that better match the resource requirements of the workload. This model enables you to focus on critical domain-specific database administration and optimization activities.

Azure SQL Managed Instance

Azure SQL Managed Instance (SQL MI) is a cloud database service that combines the broadest SQL Server database engine compatibility with all the benefits of a fully managed PaaS model.

SQL MI has close to 100% compatibility with the latest SQL Server (Enterprise Edition) database engine, providing a native Azure Virtual Network (VNet) implementation that addresses common security concerns. At the same time, SQL MI preserves all PaaS capabilities — such as automatic patching and version updates, automated backups, and high availability — that drastically reduce management overhead and total cost of ownership (TCO).

Azure Synapse Analytics

Azure Synapse Analytics — formerly known as Azure SQL Data Warehouse — is an analytics service that brings together enterprise data warehousing and big data analytics. The latest version combines these two realms with a unified experience to ingest, prepare, manage, and serve data for immediate business intelligence (BI) and machine learning (ML) needs.

Azure Synapse Analytics consists of four components:

Synapse SQL This is a complete analytics service based on the T-SQL language and supports SQL pools and SQL on-demand.

Apache Spark This is an open-source unified analytics engine for large-scale data processing.

Synapse pipelines These allow for hybrid data integration.

Synapse Studio This is the core tool used to administer and operate different features of Azure SQL Analytics.

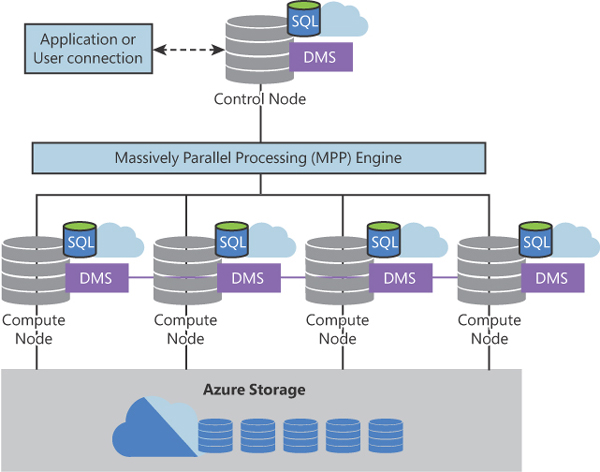

Synapse SQL leverages a scale-out architecture to distribute computational processing of data across multiple nodes. The unit of scale is an abstraction of compute power called a data warehouse unit, which is calculated using CPU, memory, and I/O values. Compute is separate from storage, which enables you to scale compute independently of the data in your system.

Synapse offers various types of pools, which you can create within a workspace to manage data:

Dedicated SQL pool (formerly SQL DW) A collection of analytic resources provisioned when you use Azure Synapse SQL. The size of a dedicated SQL pool is predetermined by the assignment of Data Warehouse Units (DWUs).

Serverless SQL pool A serverless query service that enables you to run SQL queries on files placed in Azure storage.

Serverless Spark pool An in-memory distributed processing framework for big data analytics.

Figure 1-10 shows a logical architecture diagram of Synapse SQL pool. It involves the following:

Massively parallel processing (MPP) This is the core operation that underlies the pool. MPP uses many CPUs to run the workload in parallel when executing queries. Each CPU has its own memory and disk.

Control nodes These are the worker bees in a Synapse SQL pool. They receive queries from applications and maintain communications among all the nodes.

Data Movement Service (DMS) This moves data around the nodes whenever needed and enables parallelism in operations among compute nodes like queries or data loading.

FIGURE 1.10 Azure Synapse Analytics pool architecture

Azure Cosmos DB

Azure Cosmos DB is Microsoft’s globally distributed, multi-model database service. It is built around some key concepts:

It must be highly responsive.

It must be always online.

It must be able to elastically and independently scale throughput and storage across any number of Azure regions worldwide.

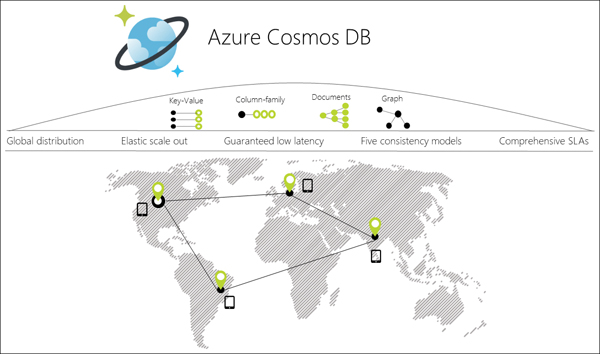

Figure 1-11 provides an overview of Azure Cosmos DB features.

FIGURE 1.11 Azure Cosmos DB features overview

One key concept in Cosmos DB is data distribution. You can configure Cosmos DB databases to be globally distributed and available in any of the Azure regions. Putting the applications in the same region enables you to achieve minimum latency and maximum speed to access data wherever it is. Which region you choose depends on the global reach of your application and where your users are located. Cosmos DB transparently replicates the data to all the regions associated with your Cosmos account. It provides a single system image of your globally distributed Azure Cosmos database and containers that your application can read and write to locally.

With Azure Cosmos DB, you can add or remove regions associated with your account at any time. The application need not be paused or redeployed to add or remove a region. It continues to be highly available at all times because of the multi-homing capabilities that the service natively provides.

Azure Cosmos DB can be deployed using several API models, including the following:

SQL API

MongoDB API

Cassandra DB API

Gremlin DB API

Table API

This multi-model architecture enables the database engineer to leverage the inherent capabilities of each model such as MongoDB for semi-structured data, Cassandra for columnar storage, or Gremlin for graph databases. Using Gremlin, for example, the data engineer could create graph entities and perform graph query operations to perform traversals across vertices and edges, achieving sub-second response time for complex scenarios like natural language processing (NLP) or social networking associations.

Applications built on SQL, MongoDB, or Cassandra will continue to operate without, or with minimal, changes to the application despite the database server being moved from SQL, MongoDB, or Cassandra to Azure Cosmos DB.

Azure Cosmos DB also guarantees unparalleled speed, with less than 10 ms latency for reads (indexed) and writes in every Azure region worldwide. In addition, multi-region writes and data distribution to any Azure region simplify the creation of worldwide cloud applications that need to access data within the boundary of the region to which they are deployed.