T-SQL Querying: TOP and OFFSET-FETCH

- By Itzik Ben-Gan, Kevin Farlee, Adam Machanic, Dejan Sarka

- 3/11/2015

Top N per group

The top N per group task is a classic one that appears in many shapes in practice. Examples include the following: “Return the latest price for each security,” “Return the employee who handled the most orders for each region,” “Return the three most recent orders for each customer,” and so on. Interestingly, as with many other tasks in T-SQL, no single solution is the most efficient in all cases. Different solutions work best in different circumstances. For top N per group tasks, two main factors determine which solution is most efficient: the availability of a supporting index and the density of the partitioning (group) column.

The task I will use to demonstrate the different solutions is returning the three most recent orders for each customer from the Sales.Orders table in the TSQLV3 database. In any top N per group task, you need to identify the elements involved: partitioning, ordering, and covering. The partitioning element defines the groups. The ordering element defines the order by which you filter the first N rows in each group. The covering element simply represents the rest of the columns you need to return. Here are the elements in our sample task:

- Partitioning: custid

- Ordering: orderdate DESC, orderid DESC

- Covering: empid

As mentioned, one of the important factors contributing to the efficiency of solutions is the availability of a supporting index. The recommended index is based on a pattern I like to think of as POC, an acronym for the elements involved (partitioning, ordering, and covering). The PO elements should form the index key list, and the C element should form the index include list. If the index is clustered, only the key list is relevant because all the other columns are part of the leaf row anyway. Run the following code to create the POC index for our sample task:

    USE TSQLV3;

    CREATE UNIQUE INDEX idx_poc
      ON Sales.Orders(custid, orderdate DESC, orderid DESC)
      INCLUDE(empid);
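As a minimal sketch of the clustered case mentioned above, the following code copies the sample table and clusters the copy on the PO elements only. The table name dbo.OrdersCopy and the index name idx_cl_po are hypothetical and used purely for illustration. No INCLUDE clause is needed because the leaf of a clustered index holds all columns.

```sql
USE TSQLV3;

-- Hypothetical scratch copy of the sample table (dbo.OrdersCopy is an assumed name)
SELECT orderid, custid, empid, orderdate
INTO dbo.OrdersCopy
FROM Sales.Orders;

-- Clustered POC index: only the P and O elements appear in the key list;
-- the C element (empid) is already part of every leaf row
CREATE UNIQUE CLUSTERED INDEX idx_cl_po
  ON dbo.OrdersCopy(custid, orderdate DESC, orderid DESC);

-- Clean up the scratch table when done
DROP TABLE dbo.OrdersCopy;
```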

The other important factor in determining which solution is most efficient is the density of the partitioning element (custid, in our case). The Sales.Orders table in our example is very small, but imagine the same structure with a larger volume of data, say 10,000,000 rows. The row size in our index is quite small (22 bytes), so over 300 rows fit in a page. This means the index will have about 30,000 pages in its leaf level and will be three levels deep. I’ll discuss two density scenarios in my examples:

- Low density: 1,000,000 customers, with 10 orders per customer on average

- High density: 10 customers, with 1,000,000 orders per customer on average
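The page-count estimate above can be reproduced with simple arithmetic. The sketch below uses the figures quoted in the text as inputs (the variable names are my own), so the result is only a rough upper bound that lands in the neighborhood of 30,000 pages. The second query is one way to inspect the actual levels and page counts of idx_poc, assuming the index exists; on the small sample table the numbers will be tiny.

```sql
USE TSQLV3;

-- Rough leaf-level estimate; both inputs are assumptions taken from the text
DECLARE
  @numrows     AS BIGINT = 10000000, -- imagined table size
  @rowsperpage AS INT    = 300;      -- the text says "over 300", so this overestimates pages

SELECT CEILING(1.0 * @numrows / @rowsperpage) AS est_leaf_pages;

-- Actual figures for the POC index, one row per index level (level 0 is the leaf)
SELECT I.name AS index_name, S.index_level, S.page_count,
  S.record_count, S.avg_record_size_in_bytes
FROM sys.indexes AS I
  CROSS APPLY sys.dm_db_index_physical_stats(
    DB_ID(), I.object_id, I.index_id, NULL, 'DETAILED') AS S
WHERE I.object_id = OBJECT_ID(N'Sales.Orders')
  AND I.name = N'idx_poc';
```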

I’ll start with a solution based on the ROW_NUMBER function that is the most efficient in the low-density case. I’ll continue with a solution based on TOP and APPLY that is the most efficient in the high-density case. Finally, I’ll describe a solution based on concatenation that, when N equals 1, performs better than the others if a POC index is not available, regardless of density.

Solution using ROW_NUMBER

Two main optimization strategies can be used to carry out our task. One strategy is to perform a seek for each customer in the POC index to the beginning of that customer’s section in the index leaf, and then perform a range scan of the three qualifying rows. Another strategy is to perform a single scan of the index leaf and then filter the interesting rows as part of the scan.

The former strategy is not efficient for low density because it involves a large number of seeks. For 1,000,000 customers, it requires 1,000,000 seeks. With three levels in the index, this approach translates to 3,000,000 random reads. Therefore, with low density, the strategy involving a single full scan and a filter is more efficient. From an I/O perspective, it should cost about 30,000 sequential reads.
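The comparison between the two strategies is plain arithmetic, and it can be laid out side by side for both density scenarios. The following is only a sketch of that calculation: the three index levels and the 30,000 leaf pages are the estimates from the text, and the variable and column names are my own.

```sql
-- Inputs are the estimates from the text, not measured values
DECLARE @levels AS INT = 3, @leafpages AS INT = 30000;

SELECT D.scenario, D.numcusts,
  D.numcusts * @levels AS est_seek_reads, -- one seek per customer, one read per level
  @leafpages           AS est_scan_reads  -- one full scan of the index leaf
FROM (VALUES('Low density',  1000000),
            ('High density', 10)) AS D(scenario, numcusts);
```

For low density, the seek strategy comes to 3,000,000 reads against 30,000 for the scan. For high density, the seek strategy drops to 30 reads while the scan still costs 30,000.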

To achieve the more efficient strategy for low density, you use the ROW_NUMBER function. You write a query that computes row numbers that are partitioned by custid and ordered by orderdate DESC, orderid DESC. This query is optimized with a single ordered scan of the POC index, as desired. You then define a CTE based on this query and, in the outer query, filter the rows with a row number that is less than or equal to 3. This part adds a Filter operator to the plan. Here’s the complete solution:

    WITH C AS
    (
      SELECT
        ROW_NUMBER() OVER(
          PARTITION BY custid
          ORDER BY orderdate DESC, orderid DESC) AS rownum,
        orderid, orderdate, custid, empid
      FROM Sales.Orders
    )
    SELECT custid, orderdate, orderid, empid
    FROM C
    WHERE rownum <= 3;

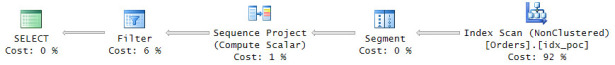

The execution plan for this solution is shown in Figure 5-9.

FIGURE 5-9 Plan for a solution with ROW_NUMBER.

As you can see, the majority of the cost of this plan is associated with the ordered scan of the POC index. As mentioned, if the table had 10,000,000 rows, the I/O cost would be about 30,000 sequential reads.
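One way to observe the I/O cost yourself is to turn on the STATISTICS IO session option before running the query. This is a sketch that assumes the POC index is in place. The logical reads are reported in the Messages pane, and against the small sample table they will be far lower than the 30,000 estimated for the imagined 10,000,000-row table.

```sql
USE TSQLV3;

SET STATISTICS IO ON;

WITH C AS
(
  SELECT
    ROW_NUMBER() OVER(
      PARTITION BY custid
      ORDER BY orderdate DESC, orderid DESC) AS rownum,
    orderid, orderdate, custid, empid
  FROM Sales.Orders
)
SELECT custid, orderdate, orderid, empid
FROM C
WHERE rownum <= 3;

SET STATISTICS IO OFF;
```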

Solution using TOP and APPLY

If you have high density (10 customers, with 1,000,000 rows each), the strategy with the index scan is not the most efficient. With a small number of partitions (customers), a plan that performs a seek in the POC index for each partition is much more efficient.

If only a single customer is involved in the task, you can achieve a plan with a seek by using the TOP filter, like so:

    SELECT TOP (3) orderid, orderdate, empid
    FROM Sales.Orders
    WHERE custid = 1
    ORDER BY orderdate DESC, orderid DESC;

To apply this logic to each customer, use the APPLY operator with the preceding query against the Customers table, like so:

    SELECT C.custid, A.orderid, A.orderdate, A.empid
    FROM Sales.Customers AS C
      CROSS APPLY ( SELECT TOP (3) orderid, orderdate, empid
                    FROM Sales.Orders AS O
                    WHERE O.custid = C.custid
                    ORDER BY orderdate DESC, orderid DESC ) AS A;

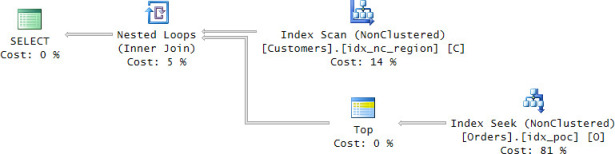

The execution plan for this solution is shown in Figure 5-10.

FIGURE 5-10 Plan for a solution with TOP and APPLY.

You get the desired plan for high density. With only 10 customers, this plan requires about 30 logical reads (10 seeks, each through three index levels). That’s a big savings compared to the cost of the scan strategy, which is about 30,000 reads.
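The same per-customer filter can also be written with OFFSET-FETCH instead of TOP. The following is a sketch of that variant, not a tested alternative from the text. It should return the same rows, and you would expect a similar seek-based plan when the POC index exists, although that is worth verifying in your environment.

```sql
USE TSQLV3;

SELECT C.custid, A.orderid, A.orderdate, A.empid
FROM Sales.Customers AS C
  CROSS APPLY ( SELECT orderid, orderdate, empid
                FROM Sales.Orders AS O
                WHERE O.custid = C.custid
                ORDER BY orderdate DESC, orderid DESC
                -- Skip no rows, then keep the first three per customer
                OFFSET 0 ROWS FETCH NEXT 3 ROWS ONLY ) AS A;
```

Note that in both forms CROSS APPLY leaves out customers that have no orders. Using OUTER APPLY instead would keep them, with NULLs in the order columns.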

Solution using concatenation (a carry-along sort)

Absent a POC index, both solutions I just described become inefficient and have problems scaling. The solution based on the ROW_NUMBER function will require sorting. Sorting has n log n scaling, becoming more expensive per row as the number of rows increases. The solution with the TOP filter and the APPLY operator might require an Index Spool operator (which involves creating a temporary index during query execution) and sorting.

Interestingly, when N equals 1 in the top N per group task and a POC index is absent, there’s a third solution that performs and scales better than the other two.

Make sure you drop the POC index before proceeding:

DROP INDEX idx_poc ON Sales.Orders;

The third solution is based on the concatenation of elements. It implements a technique you can think of as a carry-along sort. You start by writing a grouped query that groups the rows by the P element (custid). In the SELECT list, you convert the O (orderdate DESC, orderid DESC) and C (empid) elements to strings and concatenate them into one string. What’s important here is to convert the original values into string forms that preserve the original ordering behavior of the values. For example, use leading zeros for integers, use the form YYYYMMDD for dates, and so on. It’s only important to preserve ordering behavior for the O element to filter the right rows. The C element should be added just to return it in the output. You apply the MAX aggregate to the concatenated string. This results in returning one row per customer, with a concatenated string holding the elements from the most recent order. Finally, you define a CTE based on the grouped query, and in the outer query you extract the individual columns from the concatenated string and convert them back to the original types. Here’s the complete solution query:

    WITH C AS
    (
      SELECT
        custid,
        -- O elements first, in order-preserving string form, then the C element
        MAX( (CONVERT(CHAR(8), orderdate, 112)
              + RIGHT('000000000' + CAST(orderid AS VARCHAR(10)), 10)
              + CAST(empid AS CHAR(10)) ) COLLATE Latin1_General_BIN2 ) AS s
      FROM Sales.Orders
      GROUP BY custid
    )
    SELECT custid,
      CAST( SUBSTRING(s,  1,  8) AS DATE ) AS orderdate,
      CAST( SUBSTRING(s,  9, 10) AS INT  ) AS orderid,
      CAST( SUBSTRING(s, 19, 10) AS INT  ) AS empid -- back to the original INT type
    FROM C;
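To see why the string form of the O element has to preserve the original ordering, consider what MAX does with integers converted to strings without padding. The following small sketch uses two made-up values, 9 and 10, and compares the unpadded and zero-padded forms.

```sql
SELECT
  -- Character comparison: '9' sorts after '10', so the wrong value wins
  MAX(CAST(val AS VARCHAR(10))) AS max_unpadded,
  -- Fixed-width form with leading zeros: '0000000010' sorts after '0000000009'
  MAX(RIGHT('000000000' + CAST(val AS VARCHAR(10)), 10)) AS max_padded
FROM (VALUES(9),(10)) AS D(val);
```

The unpadded column returns 9, whereas the padded column returns 0000000010, which converts back to the correct maximum of 10.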

What’s nice about this solution is that it scales much better than the others. With a small input table, the optimizer usually sorts the data and then uses an order-based aggregate (the Stream Aggregate operator). But with a large input table, the optimizer usually uses parallelism, with a local hash-based aggregate for each thread doing the bulk of the work, and a global aggregate that aggregates the local ones. You can see this approach by running the carry-along-sort solution against the Orders table in the PerformanceV3 database:

    USE PerformanceV3;

    WITH C AS
    (
      SELECT
        custid,
        -- O elements first, in order-preserving string form, then the C element
        MAX( (CONVERT(CHAR(8), orderdate, 112)
              + RIGHT('000000000' + CAST(orderid AS VARCHAR(10)), 10)
              + CAST(empid AS CHAR(10)) ) COLLATE Latin1_General_BIN2 ) AS s
      FROM dbo.Orders
      GROUP BY custid
    )
    SELECT custid,
      CAST( SUBSTRING(s,  1,  8) AS DATE ) AS orderdate,
      CAST( SUBSTRING(s,  9, 10) AS INT  ) AS orderid,
      CAST( SUBSTRING(s, 19, 10) AS INT  ) AS empid -- back to the original INT type
    FROM C;

The execution plan for this query is shown in Figure 5-11.

FIGURE 5-11 Plan for a solution using concatenation.
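If you want to compare the order-based and hash-based aggregate strategies side by side, one option is to request each with a query hint. The sketch below uses a simplified grouped query rather than the full concatenation expression, and it is meant for experimentation only. The plans you get depend on the indexes present and on your hardware, so treat the operators named in the comments as what you would typically expect rather than a guarantee.

```sql
USE PerformanceV3;

-- Request an order-based aggregate (typically a Stream Aggregate over ordered input)
SELECT custid, MAX(orderdate) AS maxod
FROM dbo.Orders
GROUP BY custid
OPTION (ORDER GROUP);

-- Request a hash-based aggregate (a Hash Match operator)
SELECT custid, MAX(orderdate) AS maxod
FROM dbo.Orders
GROUP BY custid
OPTION (HASH GROUP);
```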

This exercise emphasizes again that there are usually multiple ways to solve any given querying task, and that no single solution is optimal in all cases. In query tuning, different factors are at play, and under different conditions different solutions are optimal.