The Managed Heap and Garbage Collection in the CLR

- By Jeffrey Richter

- 11/15/2012

- Managed Heap Basics

- Generations: Improving Performance

- Working with Types Requiring Special Cleanup

- Monitoring and Controlling the Lifetime of Objects Manually

Working with Types Requiring Special Cleanup

At this point, you should have a basic understanding of garbage collection and the managed heap, including how the garbage collector reclaims an object’s memory. Fortunately for us, most types need only memory to operate. However, some types require more than just memory to be useful; some types require the use of a native resource in addition to memory.

The System.IO.FileStream type, for example, needs to open a file (a native resource) and store the file’s handle. Then the type’s Read and Write methods use this handle to manipulate the file. Similarly, the System.Threading.Mutex type opens a Windows mutex kernel object (a native resource) and stores its handle, using it when the Mutex’s methods are called.

If a type wrapping a native resource gets GC’d, the GC will reclaim the memory used by the object in the managed heap; but the native resource, which the GC doesn’t know anything about, will be leaked. This is clearly not desirable, so the CLR offers a mechanism called finalization. Finalization allows an object to execute some code after the object has been determined to be garbage but before the object’s memory is reclaimed from the managed heap. All types that wrap a native resource—such as a file, network connection, socket, or mutex—support finalization. When the CLR determines that one of these objects is no longer reachable, the object gets to finalize itself, releasing the native resource it wraps, and then, later, the GC will reclaim the object from the managed heap.

System.Object, the base class of everything, defines a protected and virtual method called Finalize. When the garbage collector determines that an object is garbage, it calls the object’s Finalize method (if it is overridden). Microsoft’s C# team felt that Finalize methods were a special kind of method requiring special syntax in the programming language (similar to how C# requires special syntax to define a constructor). So, in C#, you must define a Finalize method by placing a tilde symbol (~) in front of the class name, as shown in the following code sample.

internal sealed class SomeType {
    // This is the Finalize method
    ~SomeType() {
        // The code here is inside the Finalize method
    }
}

If you were to compile this code and examine the resulting assembly with ILDasm.exe, you’d see that the C# compiler did, in fact, emit a protected override method named Finalize into the module’s metadata. If you examined the Finalize method’s IL code, you’d also see that the code inside the method’s body is emitted into a try block, and that a call to base.Finalize is emitted into a finally block.

Finalize methods are called at the completion of a garbage collection on objects that the GC has determined to be garbage. This means that the memory for these objects cannot be reclaimed right away because the Finalize method might execute code that accesses a field. Because a finalizable object must survive the collection, it gets promoted to another generation, forcing the object to live much longer than it should. This is not ideal in terms of memory consumption and is why you should avoid finalization when possible. To make matters worse, when a finalizable object gets promoted, any object referred to by its fields also gets promoted because it must continue to live too. So, try to avoid defining finalizable objects with reference type fields.

Furthermore, be aware of the fact that you have no control over when the Finalize method will execute. Finalize methods run when a garbage collection occurs, which may happen when your application requests more memory. Also, the CLR doesn’t make any guarantees as to the order in which Finalize methods are called. So, you should avoid writing a Finalize method that accesses other objects whose type defines a Finalize method; those other objects could have been finalized already. However, it is perfectly OK to access value type instances or reference type objects that do not define a Finalize method. You also need to be careful when calling static methods because these methods can internally access objects that have been finalized, causing the behavior of the static method to become unpredictable.

The CLR uses a special, high-priority dedicated thread to call Finalize methods to avoid some deadlock scenarios that could occur otherwise. If a Finalize method blocks (for example, enters an infinite loop or waits for an object that is never signaled), this special thread can’t call any more Finalize methods. This is a very bad situation because the application will never be able to reclaim the memory occupied by the finalizable objects; the application will leak memory as long as it runs. If a Finalize method throws an unhandled exception, then the process terminates; there is no way to catch this exception.
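To see the dedicated finalizer thread at work, here is a small sketch. ThreadRecordingObject and FinalizerThreadDemo are hypothetical names invented for this illustration, and the code forces a collection with GC.Collect and then blocks on GC.WaitForPendingFinalizers, which real code should not need to do. Assuming the JIT compiler does not keep the object alive (it is allocated in a separate, non-inlined method for that reason), the recorded finalizer thread ID should differ from the ID of the thread that allocated the object.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;

// Hypothetical type: its finalizer records the ID of the thread that ran it.
internal sealed class ThreadRecordingObject {
    public static Int32 FinalizerThreadId = -1;   // stays -1 until the finalizer runs

    ~ThreadRecordingObject() {
        Volatile.Write(ref FinalizerThreadId, Environment.CurrentManagedThreadId);
    }
}

internal static class FinalizerThreadDemo {
    // NoInlining keeps the allocation in its own frame, so nothing in Run
    // can keep the object reachable.
    [MethodImpl(MethodImplOptions.NoInlining)]
    private static void AllocateAndDrop() {
        new ThreadRecordingObject();
    }

    public static (Int32 Allocator, Int32 Finalizer) Run() {
        AllocateAndDrop();
        GC.Collect();                  // the object is found to be garbage and queued for finalization
        GC.WaitForPendingFinalizers(); // wait until the finalizer thread has run the Finalize method
        return (Environment.CurrentManagedThreadId,
                Volatile.Read(ref ThreadRecordingObject.FinalizerThreadId));
    }
}
```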

So, as you can see, there are a lot of caveats related to Finalize methods and they must be used with caution. Specifically, they are designed for releasing native resources. To simplify working with them, it is highly recommended that developers avoid overriding Object’s Finalize method; instead, use helper classes that Microsoft now provides in the Framework Class Library (FCL). The helper classes override Finalize and add some special CLR magic I’ll talk about as we go on. You will then derive your own classes from the helper classes and inherit the CLR magic.

If you are creating a managed type that wraps a native resource, you should first derive a class from a special base class called System.Runtime.InteropServices.SafeHandle, which looks like the following (I’ve added comments in the methods to indicate what they do).

public abstract class SafeHandle : CriticalFinalizerObject, IDisposable {
    // This is the handle to the native resource
    protected IntPtr handle;

    protected SafeHandle(IntPtr invalidHandleValue, Boolean ownsHandle) {
        this.handle = invalidHandleValue;
        // If ownsHandle is true, then the native resource is closed when
        // this SafeHandle-derived object is collected
    }

    protected void SetHandle(IntPtr handle) {
        this.handle = handle;
    }

    // You can explicitly release the resource by calling Dispose
    // This is the IDisposable interface's Dispose method
    public void Dispose() { Dispose(true); }

    // The default Dispose implementation (shown here) is exactly what you want.
    // Overriding this method is strongly discouraged.
    protected virtual void Dispose(Boolean disposing) {
        // The default implementation ignores the disposing argument.
        // If resource already released, return
        // If ownsHandle is false, return
        // Set flag indicating that this resource has been released
        // Call virtual ReleaseHandle method
        // Call GC.SuppressFinalize(this) to prevent Finalize from being called
        // If ReleaseHandle returned true, return
        // If we get here, fire ReleaseHandleFailed Managed Debugging Assistant (MDA)
    }

    // The default Finalize implementation (shown here) is exactly what you want.
    // Overriding this method is very strongly discouraged.
    ~SafeHandle() { Dispose(false); }

    // A derived class overrides this method to implement the code that releases the resource
    protected abstract Boolean ReleaseHandle();

    public void SetHandleAsInvalid() {
        // Set flag indicating that this resource has been released
        // Call GC.SuppressFinalize(this) to prevent Finalize from being called
    }

    public Boolean IsClosed {
        get {
            // Returns flag indicating whether resource was released
        }
    }

    public abstract Boolean IsInvalid {
        // A derived class overrides this property.
        // The implementation should return true if the handle's value doesn't
        // represent a resource (this usually means that the handle is 0 or -1)
        get;
    }

    // These three methods have to do with security and reference counting;
    // I'll talk about them at the end of this section
    public void DangerousAddRef(ref Boolean success) {...}
    public IntPtr DangerousGetHandle() {...}
    public void DangerousRelease() {...}
}

The first thing to notice about the SafeHandle class is that it is derived from CriticalFinalizerObject, which is defined in the System.Runtime.ConstrainedExecution namespace. The CLR treats this class and classes derived from it in a very special manner. In particular, the CLR endows this class with three cool features:

- The first time an object of any CriticalFinalizerObject-derived type is constructed, the CLR immediately JIT-compiles all of the Finalize methods in the inheritance hierarchy. Compiling these methods upon object construction guarantees that the native resource will be released when the object is determined to be garbage. Without this eager compiling of the Finalize method, it would be possible to allocate the native resource and use it, but not to get rid of it. Under low memory conditions, the CLR might not be able to find enough memory to compile the Finalize method, which would prevent it from executing, causing the native resource to leak. Or the resource might not be freed if the Finalize method contained code that referred to a type in another assembly, and the CLR failed to locate this other assembly.

- The CLR calls the Finalize method of CriticalFinalizerObject-derived types after calling the Finalize methods of non–CriticalFinalizerObject-derived types. This ensures that managed resource classes that have a Finalize method can access CriticalFinalizerObject-derived objects within their Finalize methods successfully. For example, the FileStream class’s Finalize method can flush data from a memory buffer to an underlying disk with confidence that the disk file has not been closed yet.

- The CLR calls the Finalize method of CriticalFinalizerObject-derived types if an AppDomain is rudely aborted by a host application (such as SQL Server or ASP.NET). This also is part of ensuring that the native resource is released even in a case in which a host application no longer trusts the managed code running inside of it.

The second thing to notice about SafeHandle is that the class is abstract; it is expected that another class will be derived from SafeHandle, and this class will provide a constructor that invokes the protected constructor and will override the abstract ReleaseHandle method and the abstract IsInvalid property’s get accessor method.

Most native resources are manipulated with handles (32-bit values on a 32-bit system and 64-bit values on a 64-bit system). So the SafeHandle class defines a protected IntPtr field called handle. In Windows, most handles are invalid if they have a value of 0 or -1. The Microsoft.Win32.SafeHandles namespace contains another helper class called SafeHandleZeroOrMinusOneIsInvalid, which looks like this.

public abstract class SafeHandleZeroOrMinusOneIsInvalid : SafeHandle {
    protected SafeHandleZeroOrMinusOneIsInvalid(Boolean ownsHandle)
        : base(IntPtr.Zero, ownsHandle) {
    }

    public override Boolean IsInvalid {
        get {
            if (base.handle == IntPtr.Zero) return true;
            if (base.handle == (IntPtr) (-1)) return true;
            return false;
        }
    }
}

Again, you’ll notice that the SafeHandleZeroOrMinusOneIsInvalid class is abstract, and therefore, another class must be derived from this one to provide a constructor that calls the protected constructor and to override the abstract ReleaseHandle method. The .NET Framework provides just a few public classes derived from SafeHandleZeroOrMinusOneIsInvalid, including SafeFileHandle, SafeRegistryHandle, SafeWaitHandle, and SafeMemoryMappedViewHandle. Here is what the SafeFileHandle class looks like.

public sealed class SafeFileHandle : SafeHandleZeroOrMinusOneIsInvalid {
    public SafeFileHandle(IntPtr preexistingHandle, Boolean ownsHandle)
        : base(ownsHandle) {
        base.SetHandle(preexistingHandle);
    }

    protected override Boolean ReleaseHandle() {
        // Tell Windows that we want the native resource closed.
        return Win32Native.CloseHandle(base.handle);
    }
}

The SafeWaitHandle class is implemented similarly to the SafeFileHandle class just shown. The only reason why there are different classes with similar implementations is to achieve type safety; the compiler won’t let you use a file handle as an argument to a method that expects a wait handle, and vice versa. The SafeRegistryHandle class’s ReleaseHandle method calls the Win32 RegCloseKey function.
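The release rules that SafeHandle applies on behalf of a derived class can be observed with a deliberately fake handle. CountingHandle below is a hypothetical type that wraps a plain number rather than a real native resource and simply counts how often ReleaseHandle is called. Under these assumptions, disposing a valid, owned handle twice should release it exactly once, while a handle of 0 or -1, or one constructed with ownsHandle set to false, should never reach ReleaseHandle.

```csharp
using System;
using Microsoft.Win32.SafeHandles;

// Hypothetical handle type for observation only: the IntPtr is a plain number,
// not a real native resource, and ReleaseHandle just counts its calls.
internal sealed class CountingHandle : SafeHandleZeroOrMinusOneIsInvalid {
    public Int32 ReleaseCount;   // number of times SafeHandle called ReleaseHandle

    public CountingHandle(IntPtr value, Boolean ownsHandle) : base(ownsHandle) {
        SetHandle(value);
    }

    protected override Boolean ReleaseHandle() {
        // A real type would close the native resource here.
        ReleaseCount++;
        return true;   // true means the release succeeded
    }
}
```

Because SafeHandle itself tracks whether the handle was released and whether it is owned, the derived class contains no bookkeeping of its own; ReleaseHandle only has to do the actual closing.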

It would be nice if the .NET Framework included additional classes that wrap various native resources. For example, one could imagine classes such as SafeProcessHandle, SafeThreadHandle, SafeTokenHandle, SafeLibraryHandle (its ReleaseHandle method would call the Win32 FreeLibrary function), SafeLocalAllocHandle (its ReleaseHandle method would call the Win32 LocalFree function), and so on.

All of the classes just listed (and more) actually do ship with the Framework Class Library (FCL). However, these classes are not publicly exposed; they are all internal to the assemblies that define them. Microsoft didn’t expose these classes publicly because they didn’t want to document them and do full testing of them. However, if you need any of these classes for your own work, I’d recommend that you use a tool such as ILDasm.exe or some IL decompiler tool to extract the code for these classes and integrate that code into your own project’s source code. All of these classes are trivial to implement, and writing them yourself from scratch would also be quite easy.
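As an illustration of how little code such a class takes, here is a sketch of a SafeHandle-derived wrapper for memory obtained from Marshal.AllocHGlobal. The name SafeHGlobalHandle and its Allocate factory method are hypothetical, not types from the FCL. Note the order of operations: the SafeHandle object is constructed first, and the native allocation is stored in it with SetHandle immediately afterward, which narrows the window in which the raw IntPtr is unprotected.

```csharp
using System;
using System.Runtime.InteropServices;
using Microsoft.Win32.SafeHandles;

// Hypothetical wrapper, not an FCL type: owns a block of native memory obtained
// from Marshal.AllocHGlobal and frees it with Marshal.FreeHGlobal.
public sealed class SafeHGlobalHandle : SafeHandleZeroOrMinusOneIsInvalid {
    // Private so that every instance comes from Allocate and owns its memory.
    private SafeHGlobalHandle() : base(true) { }

    public static SafeHGlobalHandle Allocate(Int32 byteCount) {
        // Construct the wrapper first, then store the allocation in it right away.
        SafeHGlobalHandle h = new SafeHGlobalHandle();
        // AllocHGlobal throws OutOfMemoryException on failure rather than returning 0.
        h.SetHandle(Marshal.AllocHGlobal(byteCount));
        return h;
    }

    protected override Boolean ReleaseHandle() {
        // 'handle' is SafeHandle's protected IntPtr field.
        Marshal.FreeHGlobal(handle);
        return true;
    }
}
```

A caller would typically write using (SafeHGlobalHandle buffer = SafeHGlobalHandle.Allocate(64)) { ... } so that the memory is freed deterministically, with the inherited finalizer as a backstop.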

The SafeHandle-derived classes are extremely useful because they ensure that the native resource is freed when a GC occurs. In addition to what we’ve already discussed, SafeHandle offers two more capabilities. First, the CLR gives SafeHandle-derived types special treatment when used in scenarios in which you are interoperating with native code. For example, let’s examine the following code.

using System;
using System.Runtime.InteropServices;
using Microsoft.Win32.SafeHandles;

internal static class SomeType {
    // This prototype is not robust
    [DllImport("Kernel32", CharSet=CharSet.Unicode, EntryPoint="CreateEvent")]
    private static extern IntPtr CreateEventBad(
        IntPtr pSecurityAttributes, Boolean manualReset, Boolean initialState, String name);

    // This prototype is robust
    [DllImport("Kernel32", CharSet=CharSet.Unicode, EntryPoint="CreateEvent")]
    private static extern SafeWaitHandle CreateEventGood(
        IntPtr pSecurityAttributes, Boolean manualReset, Boolean initialState, String name);

    public static void SomeMethod() {
        IntPtr handle = CreateEventBad(IntPtr.Zero, false, false, null);
        SafeWaitHandle swh = CreateEventGood(IntPtr.Zero, false, false, null);
    }
}

You’ll notice that the CreateEventBad method is prototyped as returning an IntPtr, which will return the handle back to managed code; however, interoperating with native code this way is not robust. You see, after CreateEventBad is called (which creates the native event resource), it is possible that a ThreadAbortException could be thrown prior to the handle being assigned to the handle variable. In the rare cases when this would happen, the managed code would leak the native resource. The only way to get the event closed is to terminate the whole process.

The SafeHandle class fixes this potential resource leak. Notice that the CreateEventGood method is prototyped as returning a SafeWaitHandle (instead of an IntPtr). When CreateEventGood is called, the CLR calls the Win32 CreateEvent function. As the CreateEvent function returns to managed code, the CLR knows that SafeWaitHandle is derived from SafeHandle, causing the CLR to automatically construct an instance of the SafeWaitHandle class on the managed heap, passing in the handle value returned from CreateEvent. The constructing of the SafeWaitHandle object and the assignment of the handle happen in native code now, which cannot be interrupted by a ThreadAbortException. Now, it is impossible for managed code to leak this native resource. Eventually, the SafeWaitHandle object will be garbage collected and its Finalize method will be called, ensuring that the resource is released.

One last feature of SafeHandle-derived classes is that they prevent someone from trying to exploit a potential security hole. The problem is that one thread could be trying to use a native resource while another thread tries to free the resource. This could manifest itself as a handle-recycling exploit. The SafeHandle class prevents this security vulnerability by using reference counting. Internally, the SafeHandle class defines a private field that maintains a count. When a SafeHandle-derived object is set to a valid handle, the count is set to 1. Whenever a SafeHandle-derived object is passed as an argument to a native method, the CLR knows to automatically increment the counter. Likewise, when the native method returns to managed code, the CLR knows to decrement the counter. For example, you would prototype the Win32 SetEvent function as follows.

[DllImport("Kernel32", ExactSpelling=true)]
private static extern Boolean SetEvent(SafeWaitHandle swh);

Now when you call this method passing in a reference to a SafeWaitHandle object, the CLR will increment the counter just before the call and decrement the counter just after the call. Of course, the manipulation of the counter is performed in a thread-safe fashion. How does this improve security? Well, if another thread tries to release the native resource wrapped by the SafeHandle object, the CLR knows that it cannot actually release it because the resource is being used by a native function. When the native function returns, the counter is decremented to 0, and the resource will be released.

If you are writing or calling code that manipulates a handle as an IntPtr, you can get the handle out of a SafeHandle object, but you should then manipulate the reference count explicitly. You accomplish this via SafeHandle’s DangerousAddRef and DangerousRelease methods. You gain access to the raw handle via the DangerousGetHandle method.
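The pattern for using the raw handle safely is to bracket the use with DangerousAddRef and DangerousRelease in a try/finally block. The sketch below wraps that pattern in a helper; RawHandleAccess, its Use method, and the TrackingHandle test type are hypothetical names. If Dispose is called while the extra reference is held, the release should be deferred until DangerousRelease drops the count to 0, mirroring the protection the CLR applies automatically when a SafeHandle is passed to a native method.

```csharp
using System;
using System.Runtime.InteropServices;
using Microsoft.Win32.SafeHandles;

// Hypothetical handle type that records whether ReleaseHandle has run.
// The IntPtr value is just a number; nothing native is opened or closed.
internal sealed class TrackingHandle : SafeHandleZeroOrMinusOneIsInvalid {
    public Boolean Released;

    public TrackingHandle(IntPtr value) : base(true) {
        SetHandle(value);
    }

    protected override Boolean ReleaseHandle() {
        Released = true;
        return true;
    }
}

internal static class RawHandleAccess {
    // Holds an extra reference on the SafeHandle while 'use' works with the raw IntPtr,
    // so a Dispose on another thread cannot release the handle in the middle of the call.
    public static T Use<T>(SafeHandle safeHandle, Func<IntPtr, T> use) {
        Boolean addedRef = false;
        try {
            // Throws ObjectDisposedException if the handle has already been released.
            safeHandle.DangerousAddRef(ref addedRef);
            return use(safeHandle.DangerousGetHandle());
        }
        finally {
            // Undo the AddRef only if it succeeded; this may run a deferred ReleaseHandle.
            if (addedRef) safeHandle.DangerousRelease();
        }
    }
}
```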

I would be remiss if I didn’t mention that the System.Runtime.InteropServices namespace also defines a CriticalHandle class. This class works exactly like the SafeHandle class in all ways except that it does not offer the reference-counting feature. The CriticalHandle class and the classes derived from it sacrifice security for better performance (because counters don’t get manipulated). As does SafeHandle, the CriticalHandle class has two types derived from it: CriticalHandleMinusOneIsInvalid and CriticalHandleZeroOrMinusOneIsInvalid. Because Microsoft favors a more secure system over a faster system, the class library includes no types derived from either of these two classes. For your own work, I recommend using CriticalHandle-derived types only if performance is an issue and you can justify the reduced security.
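For comparison, a CriticalHandle-derived wrapper looks almost identical to a SafeHandle-derived one; what it lacks is the reference count, so there is no DangerousAddRef/DangerousRelease pair protecting the raw value. CriticalHGlobalHandle below is a hypothetical type sketched under that assumption, deriving from CriticalHandleZeroOrMinusOneIsInvalid and managing memory from Marshal.AllocHGlobal.

```csharp
using System;
using System.Runtime.InteropServices;
using Microsoft.Win32.SafeHandles;

// Hypothetical CriticalHandle-based wrapper for native memory. Its shape matches a
// SafeHandle-derived wrapper, but CriticalHandle keeps no reference count, so nothing
// stops one thread from disposing the handle while another thread is using it.
public sealed class CriticalHGlobalHandle : CriticalHandleZeroOrMinusOneIsInvalid {
    private CriticalHGlobalHandle() { }

    public static CriticalHGlobalHandle Allocate(Int32 byteCount) {
        CriticalHGlobalHandle h = new CriticalHGlobalHandle();
        h.SetHandle(Marshal.AllocHGlobal(byteCount));
        return h;
    }

    protected override Boolean ReleaseHandle() {
        Marshal.FreeHGlobal(handle);
        return true;
    }
}
```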

Using a Type That Wraps a Native Resource

Now that you know how to define a SafeHandle-derived class that wraps a native resource, let’s take a look at how a developer uses it. Let’s start by talking about the common System.IO.FileStream class. The FileStream class offers the ability to open a file, read bytes from the file, write bytes to the file, and close the file. When a FileStream object is constructed, the Win32 CreateFile function is called, the returned handle is saved in a SafeFileHandle object, and a reference to this object is maintained via a private field in the FileStream object. The FileStream class also offers several additional properties (such as Length, Position, CanRead) and methods (such as Read, Write, Flush).

Let’s say that you want to write some code that creates a temporary file, writes some bytes to the file, and then deletes the file. You might start writing the code like this.

using System;
using System.IO;

public static class Program {
    public static void Main() {
        // Create the bytes to write to the temporary file.
        Byte[] bytesToWrite = new Byte[] { 1, 2, 3, 4, 5 };

        // Create the temporary file.
        FileStream fs = new FileStream("Temp.dat", FileMode.Create);

        // Write the bytes to the temporary file.
        fs.Write(bytesToWrite, 0, bytesToWrite.Length);

        // Delete the temporary file.
        File.Delete("Temp.dat"); // Throws an IOException
    }
}

Unfortunately, if you build and run this code, it might work, but most likely it won’t. The problem is that the call to File’s static Delete method requests that Windows delete a file while it is still open. So Delete throws a System.IO.IOException exception with the following string message: The process cannot access the file “Temp.dat” because it is being used by another process.

Be aware that in some cases, the file might actually be deleted! If another thread somehow caused a garbage collection to start after the call to Write and before the call to Delete, the FileStream’s SafeFileHandle field would have its Finalize method called, which would close the file and allow Delete to work. This situation is extremely unlikely, however, and therefore the previous code will fail more than 99 percent of the time.

Classes that allow the consumer to control the lifetime of the native resources they wrap implement the IDisposable interface, which looks like this.

public interface IDisposable {
    void Dispose();
}

Fortunately, the FileStream class implements the IDisposable interface and its implementation internally calls Dispose on the FileStream object’s private SafeFileHandle field. Now, we can modify our code to explicitly close the file when we want to as opposed to waiting for some GC to happen in the future. Here’s the corrected source code.

using System;
using System.IO;

public static class Program {
    public static void Main() {
        // Create the bytes to write to the temporary file.
        Byte[] bytesToWrite = new Byte[] { 1, 2, 3, 4, 5 };

        // Create the temporary file.
        FileStream fs = new FileStream("Temp.dat", FileMode.Create);

        // Write the bytes to the temporary file.
        fs.Write(bytesToWrite, 0, bytesToWrite.Length);

        // Explicitly close the file when finished writing to it.
        fs.Dispose();

        // Delete the temporary file.
        File.Delete("Temp.dat"); // This always works now.
    }
}

Now, when File’s Delete method is called, Windows sees that the file isn’t open and successfully deletes it.

Keep in mind that calling Dispose is not required to guarantee native resource cleanup. Native resource cleanup will always happen eventually; calling Dispose lets you control when that cleanup happens. Also, calling Dispose does not delete the managed object from the managed heap. The only way to reclaim memory in the managed heap is for a garbage collection to kick in. This means you can still call methods on the managed object even after you dispose of any native resources it may have been using.
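To make the point concrete for your own types, here is a sketch of a class that follows the approach described for FileStream. NativeBuffer and BufferHandle are hypothetical names: NativeBuffer keeps a SafeHandle-derived BufferHandle in a private field, forwards Dispose to it, and throws ObjectDisposedException from its methods once the native memory has been released, even though the NativeBuffer object itself is still in the managed heap. For brevity, the sketch assumes single-threaded use and reads the raw pointer with DangerousGetHandle without taking an extra reference.

```csharp
using System;
using System.Runtime.InteropServices;
using Microsoft.Win32.SafeHandles;

// Hypothetical SafeHandle for a block of native memory.
internal sealed class BufferHandle : SafeHandleZeroOrMinusOneIsInvalid {
    public BufferHandle(Int32 byteCount) : base(true) {
        SetHandle(Marshal.AllocHGlobal(byteCount));
    }

    protected override Boolean ReleaseHandle() {
        Marshal.FreeHGlobal(handle);
        return true;
    }
}

// Hypothetical consumer type: keeps its SafeHandle in a private field and forwards
// Dispose to it. It needs no finalizer of its own; BufferHandle already has one.
public sealed class NativeBuffer : IDisposable {
    private readonly BufferHandle m_handle;
    private readonly Int32 m_size;

    public NativeBuffer(Int32 size) {
        m_size = size;
        m_handle = new BufferHandle(size);
    }

    public void WriteByte(Int32 offset, Byte value) {
        Marshal.WriteByte(GetPointer(offset), value);
    }

    public Byte ReadByte(Int32 offset) {
        return Marshal.ReadByte(GetPointer(offset));
    }

    // Explicitly releases the native memory; calling it more than once is harmless.
    public void Dispose() {
        m_handle.Dispose();
    }

    private IntPtr GetPointer(Int32 offset) {
        // This object is still alive after Dispose; only its native memory is gone.
        if (m_handle.IsClosed) throw new ObjectDisposedException(nameof(NativeBuffer));
        if (offset < 0 || offset >= m_size) throw new ArgumentOutOfRangeException(nameof(offset));
        // Single-threaded sketch: a thread-safe version would use DangerousAddRef/DangerousRelease.
        return m_handle.DangerousGetHandle() + offset;
    }
}
```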

The following code calls the Write method after the file is closed, attempting to write more bytes to the file. Obviously, the bytes can’t be written, and when the code executes, the second call to the Write method throws a System.ObjectDisposedException exception with the following string message: Cannot access a closed file.

using System;
using System.IO;

public static class Program {
    public static void Main() {
        // Create the bytes to write to the temporary file.
        Byte[] bytesToWrite = new Byte[] { 1, 2, 3, 4, 5 };

        // Create the temporary file.
        FileStream fs = new FileStream("Temp.dat", FileMode.Create);

        // Write the bytes to the temporary file.
        fs.Write(bytesToWrite, 0, bytesToWrite.Length);

        // Explicitly close the file when finished writing to it.
        fs.Dispose();

        // Try to write to the file after closing it.
        fs.Write(bytesToWrite, 0, bytesToWrite.Length); // Throws ObjectDisposedException

        // Delete the temporary file.
        File.Delete("Temp.dat");
    }
}

Note that no memory corruption occurs here because the memory for the FileStream object still exists in the managed heap; it’s just that the object can’t successfully execute its methods after it is explicitly disposed.

The previous code examples show how to explicitly call a type’s Dispose method. If you decide to call Dispose explicitly, I highly recommend that you place the call in an exception-handling finally block. This way, the cleanup code is guaranteed to execute. So it would be better to write the previous code example as follows.

using System;
using System.IO;

public static class Program {
    public static void Main() {
        // Create the bytes to write to the temporary file.
        Byte[] bytesToWrite = new Byte[] { 1, 2, 3, 4, 5 };

        // Create the temporary file.
        FileStream fs = new FileStream("Temp.dat", FileMode.Create);
        try {
            // Write the bytes to the temporary file.
            fs.Write(bytesToWrite, 0, bytesToWrite.Length);
        }
        finally {
            // Explicitly close the file when finished writing to it.
            if (fs != null) fs.Dispose();
        }

        // Delete the temporary file.
        File.Delete("Temp.dat");
    }
}

Adding the exception-handling code is the right thing to do, and you must have the diligence to do it. Fortunately, the C# language provides a using statement, which offers a simplified syntax that produces code identical to the code just shown. Here’s how the preceding code would be rewritten using C#’s using statement.

using System;
using System.IO;

public static class Program {
    public static void Main() {
        // Create the bytes to write to the temporary file.
        Byte[] bytesToWrite = new Byte[] { 1, 2, 3, 4, 5 };

        // Create the temporary file.
        using (FileStream fs = new FileStream("Temp.dat", FileMode.Create)) {
            // Write the bytes to the temporary file.
            fs.Write(bytesToWrite, 0, bytesToWrite.Length);
        }

        // Delete the temporary file.
        File.Delete("Temp.dat");
    }
}

In the using statement, you initialize an object and save its reference in a variable. Then you access the variable via code contained inside using’s braces. When you compile this code, the compiler automatically emits the try and finally blocks. Inside the finally block, the compiler emits code to cast the object to an IDisposable and call the Dispose method. Obviously, the compiler allows the using statement to be used only with types that implement the IDisposable interface.
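To confirm that the compiler-generated finally block really runs when the body of a using statement throws, consider this sketch. DisposeTracker and UsingDemo are hypothetical types invented for the illustration; the exception is caught only so that the tracker can be inspected afterward.

```csharp
using System;

// Hypothetical disposable type that records whether Dispose has been called.
internal sealed class DisposeTracker : IDisposable {
    public Boolean IsDisposed { get; private set; }
    public void Dispose() { IsDisposed = true; }
}

internal static class UsingDemo {
    public static DisposeTracker ThrowInsideUsing() {
        DisposeTracker tracker = new DisposeTracker();
        try {
            using (tracker) {
                // Leaves the using block abnormally; the compiler-emitted finally still runs.
                throw new InvalidOperationException("simulated failure");
            }
        }
        catch (InvalidOperationException) {
            // Caught only so the caller can inspect the tracker; Dispose has already run.
        }
        return tracker;
    }
}
```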

An Interesting Dependency Issue

The System.IO.FileStream type allows the user to open a file for reading and writing. To improve performance, the type’s implementation makes use of a memory buffer. Only when the buffer fills does the type flush the contents of the buffer to the file. A FileStream supports the writing of bytes only. If you want to write characters and strings, you can use a System.IO.StreamWriter, as is demonstrated in the following code.

FileStream fs = new FileStream("DataFile.dat", FileMode.Create);
StreamWriter sw = new StreamWriter(fs);
sw.Write("Hi there");

// The following call to Dispose is what you should do.
sw.Dispose();
// NOTE: StreamWriter.Dispose closes the FileStream;
// the FileStream doesn't have to be explicitly closed.

Notice that the StreamWriter’s constructor takes a reference to a Stream object as a parameter, allowing a reference to a FileStream object to be passed as an argument. Internally, the StreamWriter object saves the Stream’s reference. When you write to a StreamWriter object, it internally buffers the data in its own memory buffer. When the buffer is full, the StreamWriter object writes the data to the Stream.

When you’re finished writing data via the StreamWriter object, you should call Dispose. (Because the StreamWriter type implements the IDisposable interface, you can also use it with C#’s using statement.) This causes the StreamWriter object to flush its data to the Stream object and close the Stream object.

What do you think would happen if there were no code to explicitly call Dispose? Well, at some point, the garbage collector would correctly detect that the objects were garbage and finalize them. But the garbage collector doesn’t guarantee the order in which objects are finalized. So if the FileStream object were finalized first, it would close the file. Then when the StreamWriter object was finalized, it would attempt to write data to the closed file, throwing an exception. If, on the other hand, the StreamWriter object were finalized first, the data would be safely written to the file.

How was Microsoft to solve this problem? Making the garbage collector finalize objects in a specific order would have been impossible because objects could contain references to each other, and there would be no way for the garbage collector to correctly guess the order in which to finalize these objects. Here is Microsoft’s solution: the StreamWriter type does not support finalization, and therefore it never flushes data in its buffer to the underlying FileStream object. This means that if you forget to explicitly call Dispose on the StreamWriter object, data is guaranteed to be lost. Microsoft expects developers to see this consistent loss of data and fix the code by inserting an explicit call to Dispose.
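The buffering behind this data loss is easy to observe with a MemoryStream standing in for the FileStream. This sketch (StreamWriterBufferingDemo is a hypothetical name) writes "Hi there" through a StreamWriter with its default settings: before Dispose, no bytes should have reached the underlying stream; after Dispose, the 8 bytes of text have been flushed and the MemoryStream has been closed. It relies on StreamWriter’s default UTF-8 encoding, which writes no byte order mark, and on MemoryStream.ToArray, which still works after the stream is closed.

```csharp
using System;
using System.IO;

internal static class StreamWriterBufferingDemo {
    public static (Int64 BeforeDispose, Int32 AfterDispose, Boolean StreamStillWritable) Run() {
        MemoryStream ms = new MemoryStream();
        StreamWriter sw = new StreamWriter(ms);   // AutoFlush is false by default
        sw.Write("Hi there");

        // The characters are still sitting in StreamWriter's own buffer.
        Int64 before = ms.Length;

        // Dispose flushes the StreamWriter's buffer to ms and then closes ms.
        sw.Dispose();

        // ToArray still works after a MemoryStream is closed; Length would throw.
        Int32 after = ms.ToArray().Length;
        return (before, after, ms.CanWrite);
    }
}
```

If the sw.Dispose() call were removed, nothing would ever flush that buffer, which is exactly the consistent data loss the text describes.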

Other GC Features for Use with Native Resources

Sometimes, a native resource consumes a lot of memory, but the managed object wrapping that resource occupies very little memory. The quintessential example of this is the bitmap. A bitmap can occupy several megabytes of native memory, but the managed object is tiny because it contains only an HBITMAP (a 4-byte or 8-byte value). From the CLR’s perspective, a process could allocate hundreds of bitmaps (using little managed memory) before performing a collection. But if the process is manipulating many bitmaps, the process’s memory consumption will grow at a phenomenal rate. To fix this situation, the GC class offers the following two static methods.

public static void AddMemoryPressure(Int64 bytesAllocated);
public static void RemoveMemoryPressure(Int64 bytesAllocated);

A class that wraps a potentially large native resource should use these methods to give the garbage collector a hint as to how much memory is really being consumed. Internally, the garbage collector monitors this pressure, and when it gets high, a garbage collection is forced.
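Here is a sketch of a wrapper that pairs each call to AddMemoryPressure with a matching RemoveMemoryPressure. PressuredNativeBuffer is a hypothetical type, and Marshal.AllocHGlobal stands in for whatever large native allocation (a bitmap, for example) a real class would make. The design point is symmetry: the pressure is added only after the allocation succeeds and is removed in ReleaseHandle by exactly the same amount, so the garbage collector’s estimate does not drift.

```csharp
using System;
using System.Runtime.InteropServices;
using Microsoft.Win32.SafeHandles;

// Hypothetical wrapper, not an FCL type: a native memory block whose size is
// reported to the GC with AddMemoryPressure and withdrawn when the block is freed.
public sealed class PressuredNativeBuffer : SafeHandleZeroOrMinusOneIsInvalid {
    private readonly Int64 m_byteCount;

    public PressuredNativeBuffer(Int32 byteCount) : base(true) {
        if (byteCount <= 0) throw new ArgumentOutOfRangeException(nameof(byteCount));
        m_byteCount = byteCount;
        SetHandle(Marshal.AllocHGlobal(byteCount));
        // The managed object is tiny; tell the GC how much native memory it really holds.
        GC.AddMemoryPressure(m_byteCount);
    }

    public Int64 ByteCount => m_byteCount;

    protected override Boolean ReleaseHandle() {
        Marshal.FreeHGlobal(handle);
        // Remove exactly the amount that was added.
        GC.RemoveMemoryPressure(m_byteCount);
        return true;
    }
}
```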

There are some native resources that are fixed in number. For example, Windows formerly had a restriction that it could create only five device contexts. There had also been a restriction on the number of files that an application could open. Again, from the CLR’s perspective, a process could allocate hundreds of objects (that use little memory) before performing a collection. But if the number of these native resources is limited, attempting to use more than are available will typically result in exceptions being thrown.

To fix this situation, the System.Runtime.InteropServices namespace offers the HandleCollector class.

public sealed class HandleCollector {
    public HandleCollector(String name, Int32 initialThreshold);
    public HandleCollector(String name, Int32 initialThreshold, Int32 maximumThreshold);
    public void Add();
    public void Remove();

    public Int32 Count { get; }
    public Int32 InitialThreshold { get; }
    public Int32 MaximumThreshold { get; }
    public String Name { get; }
}

A class that wraps a native resource that has a limited quantity available should use an instance of this class to give the garbage collector a hint as to how many instances of the resource are really being consumed. Internally, this object monitors the count, and when it gets high, a garbage collection is forced.

Here is some code that demonstrates the use and effect of the memory pressure methods and the HandleCollector class.

using System;

using System.Runtime.InteropServices;

public static class Program {

public static void Main() {

MemoryPressureDemo(0); // 0 causes infrequent GCs

MemoryPressureDemo(10 * 1024 * 1024); // 10MB causes frequent GCs

HandleCollectorDemo();

}

private static void MemoryPressureDemo(Int32 size) {

Console.WriteLine();

Console.WriteLine("MemoryPressureDemo, size={0}", size);

// Create a bunch of objects specifying their logical size

for (Int32 count = 0; count < 15; count++) {

new BigNativeResource(size);

}

// For demo purposes, force everything to be cleaned-up

GC.Collect();

}

private sealed class BigNativeResource {

private readonly Int32 m_size;

public BigNativeResource(Int32 size) {

m_size = size;

// Make the GC think the object is physically bigger

if (m_size > 0) GC.AddMemoryPressure(m_size);

Console.WriteLine("BigNativeResource create.");

}

~BigNativeResource() {

// Make the GC think the object released more memory

if (m_size > 0) GC.RemoveMemoryPressure(m_size);

Console.WriteLine("BigNativeResource destroy.");

}

}

private static void HandleCollectorDemo() {

Console.WriteLine();

Console.WriteLine("HandleCollectorDemo");

for (Int32 count = 0; count < 10; count++) new LimitedResource();

// For demo purposes, force everything to be cleaned-up

GC.Collect();

}

private sealed class LimitedResource {

// Create a HandleCollector telling it that collections should

// occur when two or more of these objects exist in the heap

private static readonly HandleCollector s_hc = new HandleCollector("LimitedResource", 2);

public LimitedResource() {

// Tell the HandleCollector a LimitedResource has been added to the heap

s_hc.Add();

Console.WriteLine("LimitedResource create. Count={0}", s_hc.Count);

}

~LimitedResource() {

// Tell the HandleCollector a LimitedResource has been removed from the heap

s_hc.Remove();

Console.WriteLine("LimitedResource destroy. Count={0}", s_hc.Count);

}

}

}

If you compile and run the preceding code, your output will be similar to the following output.

MemoryPressureDemo, size=0

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

MemoryPressureDemo, size=10485760

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource create.

BigNativeResource create.

BigNativeResource create.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

BigNativeResource destroy.

HandleCollectorDemo

LimitedResource create. Count=1

LimitedResource create. Count=2

LimitedResource create. Count=3

LimitedResource destroy. Count=3

LimitedResource destroy. Count=2

LimitedResource destroy. Count=1

LimitedResource create. Count=1

LimitedResource create. Count=2

LimitedResource create. Count=3

LimitedResource destroy. Count=2

LimitedResource create. Count=3

LimitedResource destroy. Count=3

LimitedResource destroy. Count=2

LimitedResource destroy. Count=1

LimitedResource create. Count=1

LimitedResource create. Count=2

LimitedResource create. Count=3

LimitedResource destroy. Count=2

LimitedResource destroy. Count=1

LimitedResource destroy. Count=0

Finalization Internals

On the surface, finalization seems pretty straightforward: you create an object and its Finalize method is called when it is collected. But after you dig in, finalization is more complicated than this.

When an application creates a new object, the new operator allocates the memory from the heap. If the object’s type defines a Finalize method, a pointer to the object is placed on the finalization list just before the type’s instance constructor is called. The finalization list is an internal data structure controlled by the garbage collector. Each entry in the list points to an object that should have its Finalize method called before the object’s memory can be reclaimed.

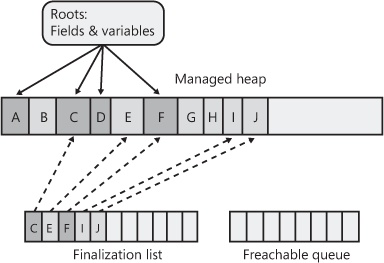

Figure 21-13 shows a heap containing several objects. Some of these objects are reachable from application roots, and some are not. When objects C, E, F, I, and J were created, the system detected that these objects’ types defined a Finalize method and so added references to these objects to the finalization list.

Figure 21-13 The managed heap showing pointers in its finalization list.

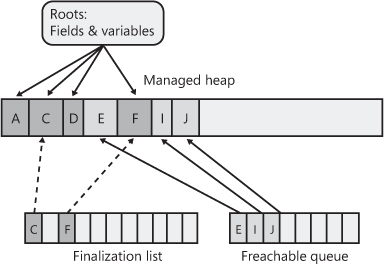

When a garbage collection occurs, objects B, E, G, H, I, and J are determined to be garbage. The garbage collector scans the finalization list looking for references to these objects. When a reference is found, the reference is removed from the finalization list and appended to the freachable queue. The freachable queue (pronounced “F-reachable”) is another of the garbage collector’s internal data structures. Each reference in the freachable queue identifies an object that is ready to have its Finalize method called. After the collection, the managed heap looks like Figure 21-14.

Figure 21-14 The managed heap showing pointers that moved from the finalization list to the freachable queue.

In this figure, you see that the memory occupied by objects B, G, and H has been reclaimed because these objects didn’t have a Finalize method. However, the memory occupied by objects E, I, and J couldn’t be reclaimed because their Finalize methods haven’t been called yet.

A special high-priority CLR thread is dedicated to calling Finalize methods. A dedicated thread is used to avoid potential thread synchronization situations that could arise if one of the application’s normal-priority threads were used instead. When the freachable queue is empty (the usual case), this thread sleeps. But when entries appear, this thread wakes, removes each entry from the queue, and then calls each object’s Finalize method. Because of the way this thread works, you shouldn’t execute any code in a Finalize method that makes any assumptions about the thread that’s executing the code. For example, avoid accessing thread-local storage in the Finalize method.
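You can observe the dedicated finalizer thread with a small experiment. In this sketch (FinalizerThreadDemo and Probe are hypothetical names), a finalizer records Environment.CurrentManagedThreadId, and the caller compares that value with its own thread ID after forcing a collection with GC.Collect and waiting with GC.WaitForPendingFinalizers. The object is allocated in a separate, non-inlined method so that no local variable in the caller keeps it alive; results of experiments like this can otherwise vary with JIT and debugger settings.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;

public static class FinalizerThreadDemo {
    private static Int32 s_finalizerThreadId;  // written by the finalizer thread

    private sealed class Probe {
        ~Probe() {
            // Runs on the CLR's finalizer thread, not on the thread that
            // created the object or called GC.Collect.
            Volatile.Write(ref s_finalizerThreadId, Environment.CurrentManagedThreadId);
        }
    }

    // The Probe is unreachable as soon as this method returns.
    [MethodImpl(MethodImplOptions.NoInlining)]
    private static void CreateGarbage() { new Probe(); }

    // Returns the calling thread's ID and the ID of the thread that ran ~Probe.
    public static (Int32 CallerId, Int32 FinalizerId) Run() {
        CreateGarbage();
        GC.Collect();                   // Probe is garbage; its reference moves to the freachable queue
        GC.WaitForPendingFinalizers();  // block until the finalizer thread drains the queue
        return (Environment.CurrentManagedThreadId, Volatile.Read(ref s_finalizerThreadId));
    }
}
```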

In the future, the CLR may use multiple finalizer threads. So you should avoid writing any code that assumes that Finalize methods will be called serially. With just one finalizer thread, there could be performance and scalability issues in the scenario in which you have multiple CPUs allocating finalizable objects but only one thread executing Finalize methods—the one thread might not be able to keep up with the allocations.
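Because Finalize methods might someday run concurrently, any state they share should be updated in a thread-safe way. This sketch (the type names are hypothetical) counts finalized objects with Interlocked.Increment instead of a plain ++ operator; the plain version happens to work with a single finalizer thread but could lose updates if several threads ran finalizers at once.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;

public static class FinalizeCounter {
    private static Int32 s_finalizedCount;

    private sealed class Tracked {
        ~Tracked() {
            // Don't assume finalizers run one at a time: a plain
            // s_finalizedCount++ is a read-modify-write that could lose updates.
            Interlocked.Increment(ref s_finalizedCount);
        }
    }

    // Allocate in a separate, non-inlined method so no local keeps the objects alive.
    [MethodImpl(MethodImplOptions.NoInlining)]
    private static void Allocate(Int32 count) {
        for (Int32 i = 0; i < count; i++) new Tracked();
    }

    public static Int32 Run(Int32 count) {
        Allocate(count);
        GC.Collect();
        GC.WaitForPendingFinalizers();
        return Volatile.Read(ref s_finalizedCount);
    }
}
```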

The interaction between the finalization list and the freachable queue is fascinating. First, I’ll tell you how the freachable queue got its name. Well, the “f” is obvious and stands for finalization; every entry in the freachable queue is a reference to an object in the managed heap that should have its Finalize method called. The reachable part of the name means that these objects are reachable. To put it another way, the freachable queue is considered a root, just as static fields are roots. So a reference in the freachable queue keeps the object it refers to reachable, which means that the object is not garbage.

In short, when an object isn’t reachable, the garbage collector considers the object to be garbage. Then when the garbage collector moves an object’s reference from the finalization list to the freachable queue, the object is no longer considered garbage and its memory can’t be reclaimed. When an object is garbage and then not garbage, we say that the object has been resurrected.
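The resurrection just described is normally temporary: once Finalize returns, the object becomes garbage again. A finalizer can, however, make the resurrection permanent by storing this in a root such as a static field. The following hypothetical sketch (ResurrectionDemo and Phoenix are illustrative names) does exactly that; it demonstrates the mechanism and is not a recommended practice. Note that the resurrected object's Finalize method won't be called again unless GC.ReRegisterForFinalize is used.

```csharp
using System;
using System.Runtime.CompilerServices;

public static class ResurrectionDemo {
    // A root that the finalizer (unwisely) stores 'this' into.
    public static Phoenix Resurrected;

    public sealed class Phoenix {
        public readonly String Name;
        public Phoenix(String name) { Name = name; }

        ~Phoenix() {
            // Storing 'this' in a static field makes the object reachable again.
            ResurrectionDemo.Resurrected = this;
        }
    }

    [MethodImpl(MethodImplOptions.NoInlining)]
    private static void CreateGarbage() { new Phoenix("phoenix"); }

    public static Phoenix Run() {
        CreateGarbage();
        GC.Collect();                   // Phoenix is garbage; moved to the freachable queue
        GC.WaitForPendingFinalizers();  // ~Phoenix runs and resurrects the object
        return Resurrected;             // non-null: the object survived
    }
}
```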

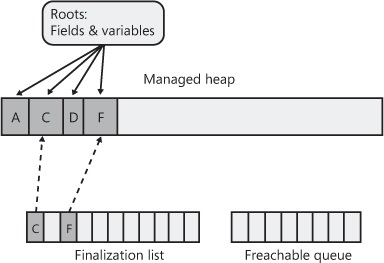

As freachable objects are marked, objects referred to by their reference type fields are also marked recursively; all these objects must get resurrected in order to survive the collection. At this point, the garbage collector has finished identifying garbage. Some of the objects identified as garbage have been resurrected. The garbage collector then compacts the heap, reclaiming the memory of the true garbage and promoting the resurrected objects to an older generation (not ideal). Finally, the special finalization thread empties the freachable queue, executing each object’s Finalize method.
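The recursive marking has a practical consequence: a Finalize method can safely read the objects its own fields refer to, even though those objects are otherwise unreachable, because they were resurrected along with it. In this hypothetical sketch, Parent has a finalizer and Child does not; Parent's finalizer can still read the Child's value.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;

public static class ResurrectedGraphDemo {
    private static Int32 s_valueSeenByFinalizer;

    private sealed class Child {  // no Finalize method of its own
        public readonly Int32 Value;
        public Child(Int32 value) { Value = value; }
    }

    private sealed class Parent {
        private readonly Child m_child;
        public Parent(Child child) { m_child = child; }

        ~Parent() {
            // m_child is unreachable from the application's roots, but it was
            // marked through the freachable queue, so its memory is still valid.
            Volatile.Write(ref s_valueSeenByFinalizer, m_child.Value);
        }
    }

    [MethodImpl(MethodImplOptions.NoInlining)]
    private static void CreateGarbage() { new Parent(new Child(42)); }

    public static Int32 Run() {
        CreateGarbage();
        GC.Collect();
        GC.WaitForPendingFinalizers();
        return Volatile.Read(ref s_valueSeenByFinalizer);
    }
}
```

This guarantee covers only the child's memory. If Child had its own Finalize method, it might already have run by the time Parent's Finalize method executes, because the order in which Finalize methods are called is not guaranteed.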

The next time the garbage collector is invoked on the older generation, it will see that the finalized objects are truly garbage, because the application’s roots don’t point to them and the freachable queue no longer points to them either. The memory for these objects is simply reclaimed. The important point to get from all of this is that at least two garbage collections are required to reclaim memory used by objects that require finalization. In practice, more than two collections are often necessary, because the objects get promoted to an older generation, which is collected less frequently. Figure 21-15 shows what the managed heap looks like after the second garbage collection.

Figure 21-15 Status of the managed heap after second garbage collection.
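The two-collection behavior can be observed with weak references. This sketch relies on the documented meaning of the WeakReference trackResurrection parameter: a short weak reference (trackResurrection: false) is cleared as soon as the object is found to be garbage, while a long weak reference (trackResurrection: true) stays valid until the object's memory is actually reclaimed. The type names are hypothetical, and GC.Collect is called explicitly purely for demonstration.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;

public static class TwoCollectionsDemo {
    private static Int32 s_finalizedCount;

    private sealed class Finalizable {
        // A non-empty Finalize method, so finalization does observable work.
        ~Finalizable() { Interlocked.Increment(ref s_finalizedCount); }
    }

    // Allocate in a separate, non-inlined method so no local keeps the object alive.
    [MethodImpl(MethodImplOptions.NoInlining)]
    private static (WeakReference ShortRef, WeakReference LongRef) CreateGarbage() {
        var obj = new Finalizable();
        return (new WeakReference(obj, trackResurrection: false),  // short weak reference
                new WeakReference(obj, trackResurrection: true));  // long weak reference
    }

    public static (Boolean ShortAfterFirst, Boolean LongAfterFirst, Boolean LongAfterSecond) Run() {
        var (shortRef, longRef) = CreateGarbage();

        GC.Collect();                    // 1st GC: object found to be garbage, moved to freachable queue
        Boolean shortAfterFirst = shortRef.IsAlive;  // cleared: object was garbage
        Boolean longAfterFirst = longRef.IsAlive;    // still alive: memory not yet reclaimed

        GC.WaitForPendingFinalizers();   // finalizer thread runs ~Finalizable
        GC.Collect();                    // 2nd GC: the object's memory is reclaimed
        Boolean longAfterSecond = longRef.IsAlive;

        return (shortAfterFirst, longAfterFirst, longAfterSecond);
    }
}
```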