Agile Project Management with Kanban: Adapting from Waterfall

- 3/16/2015

Celebrating performance improvements

Even if your traditional Waterfall team takes easily to Kanban and likes the change, members may still question whether the change was worth it. After all, traditional Waterfall does work, and has likely worked for years. Your management probably has a similar concern: Was the effort to adopt Kanban worth the cost?

While each team has its own reasons, you likely adopted Kanban to increase agility and deliver more high-quality value to your customers in less time. The good news is that it’s straightforward to measure those outcomes. By measuring them from the start, and showing your team and management how your results improve over time, you’ll enhance everyone’s commitment to the change and boost morale and team pride in the process.

I’ll focus on two daily measurements and their moving averages to capture agility, productivity, and quality. I selected these particular measures—completed tasks and unresolved bugs—because they are easy to calculate for both Waterfall and Kanban, they have clear definitions, and they relate directly to agility, productivity, and quality. Here’s a breakdown of each measure:

| | Completed tasks | Unresolved bugs |
|---|---|---|
| Definition | Count of the team’s tasks that completed validation that day with all issues resolved | Count of the team’s unresolved bugs that day |
| Concept measured | Day-to-day agility | Day-to-day bug debt |
| Moving average captures | Productivity | Product quality |
| Waterfall calculation | Count the rough number of the team’s tasks in each spec that completed validation and had all issues resolved by the end of a milestone or release, and associate that count with the end date of the respective milestone or release. | Extract the number of the team’s unresolved bugs by day from your bug-tracking system. |
| Kanban calculation | Count the number of the team’s tasks that completed the done rules for validation on each date. | Extract the number of the team’s unresolved bugs by day from your bug-tracking system. |
| Caveats | Only measures productivity of task completion, not other daily activities or tasks without specifications. | Only measures aspects of product quality captured in bugs, but doesn’t account for different types of bugs. |
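If your tracking data can be exported, both daily measures reduce to simple counting. The following Python is a minimal sketch under stated assumptions: each task is represented only by the date it is credited as complete, and each bug is a hypothetical `(opened, resolved)` pair of dates exported from your bug-tracking system, with `resolved` set to `None` while the bug is still open. The function names are illustrative and not part of any tool.

```python
from collections import Counter
from datetime import date, timedelta


def daterange(start, end):
    """Yield every date from start to end, inclusive."""
    day = start
    while day <= end:
        yield day
        day += timedelta(days=1)


def completed_tasks_by_day(credit_dates, start, end):
    """Count the tasks credited to each day, including days with zero.

    Kanban: pass the date each task met the validation done rules.
    Waterfall: pass the end date of the milestone or release in which each
    task was validated, so all of that milestone's tasks land on one day.
    """
    counts = Counter(credit_dates)
    return {day: counts[day] for day in daterange(start, end)}


def unresolved_bugs_by_day(bugs, start, end):
    """Count the bugs still unresolved on each day.

    Each bug is a hypothetical (opened, resolved) pair of dates, with
    resolved set to None while the bug is open. Assumption: a bug resolved
    on a given day no longer counts as unresolved on that day.
    """
    return {
        day: sum(
            1
            for opened, resolved in bugs
            if opened <= day and (resolved is None or resolved > day)
        )
        for day in daterange(start, end)
    }


# Usage example with made-up dates.
start, end = date(2015, 1, 1), date(2015, 1, 3)
kanban = completed_tasks_by_day(
    [date(2015, 1, 1), date(2015, 1, 3), date(2015, 1, 3)], start, end
)
waterfall = completed_tasks_by_day([end] * 5, start, end)  # five tasks bulked on the milestone end
bugs = [(date(2015, 1, 1), date(2015, 1, 2)), (date(2015, 1, 2), None)]
open_bugs = unresolved_bugs_by_day(bugs, start, end)
```

The only difference between the Waterfall and Kanban task counts in this sketch is which date each task is credited to; the counting itself is identical.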

Notes on these measures:

- If the size of your team varies dramatically, you should divide by the number of team members, resulting in completed tasks per team member and unresolved bugs per team member. Doing so makes the measures easier to compare across teams but less intuitive to management, in my experience.

- The completed tasks calculation for Waterfall bulks all the tasks at the end of each milestone or release because that’s the earliest date when the tasks are known to be validated with all issues resolved. However, if your Waterfall team associates all bugs directly with tasks, you can count tasks toward the day when their last bug was resolved. Getting this extra accuracy is nice, but it isn’t essential. In particular, the extra accuracy doesn’t matter for the moving average (the productivity measure), so long as you average over the length of your milestones.

- The moving average can be a 7-day average for measuring weekly productivity, a 30-day average for monthly productivity, or whatever length you want. To compare the productivity of Waterfall to Kanban, you should average over the length of your Waterfall milestones.
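The moving average and the optional per-member normalization are equally small. This Python sketch assumes a trailing window (each day averages itself and up to `window - 1` preceding days) and averages over whatever days are available at the start of the series; both choices are assumptions, and `trailing_moving_average` and `per_member` are hypothetical helper names. For nine-week Waterfall milestones, the window would be 63 days.

```python
def trailing_moving_average(daily_values, window):
    """Average each day's value with up to window - 1 preceding days.

    Early days, before a full window exists, average over the days
    available so far (an assumption; you might prefer to leave them blank).
    """
    averages = []
    for i in range(len(daily_values)):
        recent = daily_values[max(0, i - window + 1): i + 1]
        averages.append(sum(recent) / len(recent))
    return averages


def per_member(daily_values, team_sizes):
    """Divide each day's value by that day's team size."""
    return [value / size for value, size in zip(daily_values, team_sizes)]


# Usage: a 2-day window over four days, then normalize by team size.
smoothed = trailing_moving_average([1, 2, 3, 4], window=2)
normalized = per_member([10, 12], [5, 4])
```

In practice you would pass `window=7` for weekly productivity or `window=63` to compare against nine-week Waterfall milestones.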

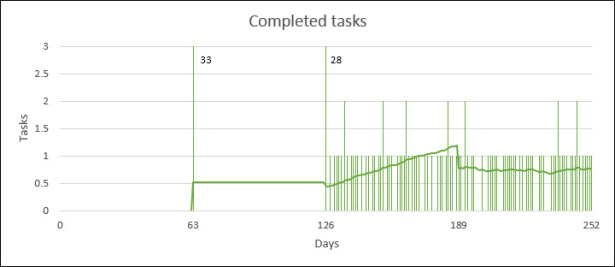

Figure 4-1 and Figure 4-2 are examples of plots of completed tasks and unresolved bugs over four nine-week periods (included in a downloadable worksheet; see the book’s introduction for more details). The first two periods are Waterfall milestones, each split into six weeks of implementation and three weeks of validation. The last two periods are Kanban running continuously. The data is based on the team configuration I used as an example in Chapter 3, “Hitting deadlines.” I have the team working seven days a week (for simplicity, not punishment). Their pace of task work remains the same throughout all four periods, which is artificial but unbiased. (I do add a little random noise throughout to make the results a bit more realistic.)

FIGURE 4-1 Plot of completed tasks over four nine-week periods, with a superimposed running average.

FIGURE 4-2 Plot of unresolved bugs over four nine-week periods, with a superimposed running average.

In the chart of completed tasks, all tasks are considered completed on the final day of validation during the first two Waterfall periods. The two columns of completed tasks from those periods rise to about 10 times the height of the chart, so I’ve zoomed in to better show the nine-week moving average line that measures productivity. It starts at around 0.52 tasks per day (33 tasks / 63 days), increases to around 1.2 tasks per day as the results from the second Waterfall milestone combine with the Kanban results, and then settles at around 0.76 tasks per day as the steady stream of Kanban work establishes itself.
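The starting value follows directly from the nine-week (63-day) moving average. Writing $c_k$ for completed tasks on day $k$ (notation introduced here for illustration), the 33 tasks credited on the last day of the first milestone give the first line below. The last line is a rough sanity check of the transition bump, assuming the second milestone also credits about 33 tasks and Kanban already runs near its eventual 0.76 tasks per day while the window still holds that milestone’s column plus 62 Kanban days:

```latex
\begin{aligned}
\bar{c}_d &= \frac{1}{63} \sum_{k=d-62}^{d} c_k \\
\bar{c}_{63} &= \frac{33}{63} \approx 0.52 \ \text{tasks per day} \\
\bar{c}_{\text{transition}} &\lesssim \frac{33 + 62 \times 0.76}{63} \approx 1.27 \ \text{tasks per day}
\end{aligned}
```

That upper bound is the same order as the peak of around 1.2 visible in Figure 4-1; the bump is simply one bulked Waterfall column sharing a window with daily Kanban completions.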

The improvement from 0.52 to 0.76 represents a 46 percent increase in productivity. That’s equivalent to working five days and getting more than seven days of tasks completed: it’s like you worked hard through the weekend and still got the weekend off. The day-to-day agility of Kanban is also immediately apparent, as work is completed daily instead of being delivered in bulk.
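The productivity gain is a plain ratio of the two moving-average levels, and the workweek comparison applies the same ratio to five days of work:

```latex
\frac{0.76 - 0.52}{0.52} \approx 0.46 \quad (\text{a 46 percent increase}),
\qquad
5 \ \text{days} \times \frac{0.76}{0.52} \approx 7.3 \ \text{days of tasks}
```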

The unresolved bugs chart in Figure 4-2 displays a fairly typical Waterfall pattern: a bug backlog builds up during the six-week implementation phase of the milestone and is then resolved during the three-week validation phase. This appears as two inverted V shapes, one for each Waterfall milestone. This simple example has a fairly uniform bug resolution rate during validation. In practice, bug resolution rates often fluctuate significantly as complicated issues are uncovered and some fixes regress old problems.
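The inverted V can be reproduced with a toy model, which also shows why the nine-week moving average stays high under Waterfall even though the backlog touches zero at each milestone end. This Python sketch rests on simplifying assumptions that are not taken from the book’s worksheet: bugs are found at a constant, hypothetical `find_rate` during the 42 implementation days and none are fixed; during the 21 validation days the backlog drains at a uniform rate and no new bugs are found.

```python
def waterfall_bug_backlog(find_rate, impl_days=42, valid_days=21):
    """Toy model of one Waterfall milestone's daily unresolved-bug count.

    Implementation: the backlog grows by find_rate bugs per day, none fixed.
    Validation: the peak backlog drains linearly, reaching zero on the last day.
    """
    peak = find_rate * impl_days
    building = [find_rate * (d + 1) for d in range(impl_days)]
    draining = [peak - peak * (d + 1) / valid_days for d in range(valid_days)]
    return building + draining


# Usage: one nine-week milestone with one new bug per day during implementation.
backlog = waterfall_bug_backlog(find_rate=1)
milestone_average = sum(backlog) / len(backlog)
```

Because the rise and the fall are both linear, the average over the milestone works out to exactly half the peak for any find rate; here that is 21 unresolved bugs on average against a peak of 42.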

Once the Kanban period starts, there is a fairly steady stream of unresolved bugs, but it never builds up. Bugs are kept in check by the WIP limits and done rules. As a result, the nine-week moving average line measuring quality improves dramatically with Kanban. The product is always ready for production use and customer feedback.

Seeing these dramatic improvements in productivity and quality should be enough to warm the hearts of hardened cynics and skeptical managers. Sharing them weekly with your team and your management gives you all something to celebrate (in addition to the increased customer value you deliver).

What’s more, with Kanban, the quality level is steady throughout the product cycle, so the prolonged stabilization periods associated with traditional Waterfall are gone or, in large organizations, reduced to only two or three weeks of system-wide testing. Replacing months of stabilization with a few weeks at most yields more time for continuous product enhancements, making the measurable improvements in productivity and quality even more remarkable.

However, a month or two will pass before you begin to see great results. The cynics and skeptics on your team are bound to have questions. It’s time for the rude Q & A.